According to VentureBeat, Databricks has released a new AI benchmark called OfficeQA, designed to test how well AI agents handle the messy, document-heavy tasks common in real enterprises. The company’s research found that even top-performing agents like Claude Opus 4.5 and GPT-5.1 scored under 45% accuracy on raw PDFs from a complex corpus of U.S. Treasury Bulletins: 89,000 pages published from 1939 onward. When the documents were pre-parsed with Databricks’ own tool, accuracy improved to 67.8% for Claude and 52.8% for GPT, but fundamental gaps in parsing, visual reasoning, and handling document versions remained. Principal research scientist Erich Elsen said the motivation was that existing academic benchmarks don’t reflect what customers actually need to do, which pushes AI toward solving the wrong problems. The benchmark includes 246 questions with validated ground-truth answers, which makes automated evaluation possible. The results are a sobering reality check for businesses deploying document AI.
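Validated ground-truth answers are what let a benchmark like this be scored automatically, without a human grading each response. The article doesn’t describe OfficeQA’s actual scoring rule, so the following is only a minimal sketch under stated assumptions: answers are short strings, numeric answers count as correct within a 1% relative tolerance, and everything else must match exactly after light normalization. The helpers `normalize`, `is_correct`, and `accuracy` are hypothetical names, not part of OfficeQA or any Databricks tool.

```python
import math
import re
from typing import List, Optional

def normalize(answer: str) -> str:
    """Lowercase, trim, collapse whitespace, and drop commas and dollar signs."""
    text = answer.strip().lower().replace(",", "").replace("$", "")
    return re.sub(r"\s+", " ", text)

def to_number(text: str) -> Optional[float]:
    """Return the text as a float, or None if it is not numeric."""
    try:
        return float(text)
    except ValueError:
        return None

def is_correct(predicted: str, ground_truth: str, rel_tol: float = 0.01) -> bool:
    """Assumed rule: numbers match within rel_tol; other answers must match exactly."""
    p, g = normalize(predicted), normalize(ground_truth)
    p_num, g_num = to_number(p), to_number(g)
    if p_num is not None and g_num is not None:
        return math.isclose(p_num, g_num, rel_tol=rel_tol)
    return p == g

def accuracy(predictions: List[str], ground_truths: List[str]) -> float:
    """Fraction of predictions that match their validated ground-truth answer."""
    if len(predictions) != len(ground_truths):
        raise ValueError("each prediction needs exactly one ground-truth answer")
    if not ground_truths:
        return 0.0
    hits = sum(is_correct(p, g) for p, g in zip(predictions, ground_truths))
    return hits / len(ground_truths)

print(accuracy(["$1,234.5", "Treasury"], ["1234.5", "treasury"]))  # → 1.0
```

The tolerance is a design choice, not a given: a benchmark built on financial tables might reasonably demand exact figures, in which case you would set `rel_tol` to 0 or compare normalized strings only.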

The benchmark reality check

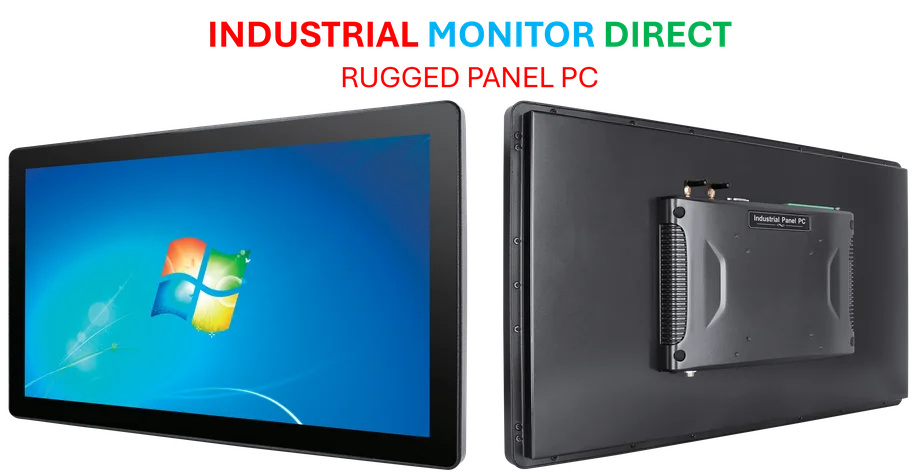

Here’s the thing: we’ve been dazzled by AI acing the bar exam or solving crazy math puzzles. But can it find the Q3 sales figure in a 150-page scanned PDF report from 1987 that has a nested table with merged cells? According to OfficeQA, the answer is a resounding “not reliably.” This isn’t about intelligence in the abstract. It’s about practical, grounded reasoning in the chaotic data environment where businesses actually live, an environment full of scanned images, revised documents, and complex financial tables. The fact that accuracy jumped substantially (to 67.8% for Claude) just by fixing the parsing step is telling. It suggests the core LLM reasoning might be okay, but it’s being fed garbage data from the start. For any tech team integrating AI, parsing is the first and most critical bottleneck.

Winners, losers, and the parsing wars

So who does this hurt? Well, any company selling an “out-of-the-box” AI document agent that glosses over the parsing problem. Their marketing claims just hit a wall of 43% accuracy. The winners? Companies like Databricks that are deep in the data engineering trenches. They’re highlighting that the real value isn’t just in the model—it’s in the entire data pipeline that cleans, parses, and structures information before the AI even looks at it. This benchmark basically creates a new competitive axis. It’s no longer just about whose model has more parameters. It’s about whose platform can best tame the chaos of real enterprise data. And that’s a much harder problem to solve. It shifts the advantage from pure AI research labs to companies with robust data platforms.

What enterprises should do now

If you’re planning a document AI project, this research is your pre-flight checklist. First, test on YOUR documents, not a sanitized demo set. Throw your worst, most complex reports at the agent and see what happens. Second, budget and plan for parsing. Assume that off-the-shelf OCR will fail. You’ll likely need custom solutions, which means time and money. Third, set realistic expectations for hard questions. The plateau at 40% accuracy on multi-step analysis means you can’t fully automate mission-critical decisions yet. Human oversight is still essential. This benchmark isn’t a death knell for enterprise AI. It’s a badly needed course correction. It tells developers exactly where to aim: better parsing, handling document versions, and cracking visual reasoning. Now the real work begins.
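The first and third steps of that checklist can be folded into one small test harness. Here is a minimal sketch in Python, assuming you have a hand-validated set of questions about your own documents and can call each candidate pipeline as a function that takes a document ID and a question. `Question`, `Report`, `run_preflight`, and the stub agents are all hypothetical names for illustration, not part of OfficeQA or any Databricks API. Running the same questions through a raw-PDF pipeline and a pre-parsed one helps separate parsing failures from reasoning failures, and every miss is queued for a human reviewer.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass(frozen=True)
class Question:
    doc_id: str    # which of YOUR documents the question targets
    question: str
    expected: str  # answer validated by a human reviewer

@dataclass
class Report:
    pipeline: str
    accuracy: float
    needs_review: List[Question] = field(default_factory=list)

def exact_match(answer: str, expected: str) -> bool:
    """Simplest possible grader: case- and whitespace-insensitive equality."""
    return answer.strip().lower() == expected.strip().lower()

def run_preflight(name: str,
                  pipeline: Callable[[str, str], str],
                  questions: List[Question],
                  is_correct: Callable[[str, str], bool] = exact_match) -> Report:
    """Score one pipeline and collect every wrong answer for human review."""
    misses = [q for q in questions
              if not is_correct(pipeline(q.doc_id, q.question), q.expected)]
    accuracy = 1 - len(misses) / len(questions) if questions else 0.0
    return Report(name, accuracy, misses)

if __name__ == "__main__":
    # Hypothetical toy questions; replace with your own worst documents.
    questions = [
        Question("report-1987", "Total receipts in Q3?", "1234"),
        Question("report-1987", "Revised figure for Q2?", "987"),
    ]

    # Hypothetical stand-ins for a raw-PDF agent and a pre-parsed agent.
    def raw_agent(doc_id: str, question: str) -> str:
        return "unknown"

    parsed_answers = {"Total receipts in Q3?": "1234"}

    def parsed_agent(doc_id: str, question: str) -> str:
        return parsed_answers.get(question, "unknown")

    for name, agent in [("raw", raw_agent), ("parsed", parsed_agent)]:
        report = run_preflight(name, agent, questions)
        print(f"{name}: {report.accuracy:.0%} correct, "
              f"{len(report.needs_review)} sent to human review")
```

Exact matching is deliberately strict here; a numeric tolerance or a human spot check may suit your documents better, and swapping in a different `is_correct` function leaves the rest of the harness unchanged.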