According to Forbes, the key prediction for 2026 is that artificial intelligence will finally start to infuse itself into corporate culture, moving beyond isolated lab projects. This comes as an EY survey of 1,100 companies finds managers and employees are too overwhelmed to manage AI agents, and an MIT study suggests a staggering 95% of generative AI initiatives aren’t delivering expected results due to poor organizational alignment. Experts like Bryan Cheung, CMO of Liferay, predict a shift to “Bring Your Own AI” for specific tasks, while Crystal Foote of the Digital Culture Group and Dev Patnaik, CEO of Jump, emphasize that diversity, cultural intelligence, and building human intuition are now the strategic edge. The goal, as outlined by Stanford’s Diyi Yang, is to connect AI to how people think and collaborate for long-term development, not just short-term tasks.

The culture gap is killing AI

Here’s the thing: we’ve all seen this movie before. A shiny new tech gets hailed as a miracle cure, companies rush to buy it, and then… nothing. Or worse, chaos. The Forbes piece hits on a brutal truth we often ignore: you can’t fix a broken process with a smart tool. The MIT study finding that 95% of gen AI projects aren’t hitting targets is a screaming alarm bell. It’s not that the tech is bad. It’s that we’re trying to “plop AI on top of old ways of doing things,” as Dev Patnaik says. If your team is already overwhelmed and siloed, giving them a powerful AI agent is like handing a race car to someone stuck in a traffic jam. The problem isn’t the car.

From megaprojects to human-scale tools

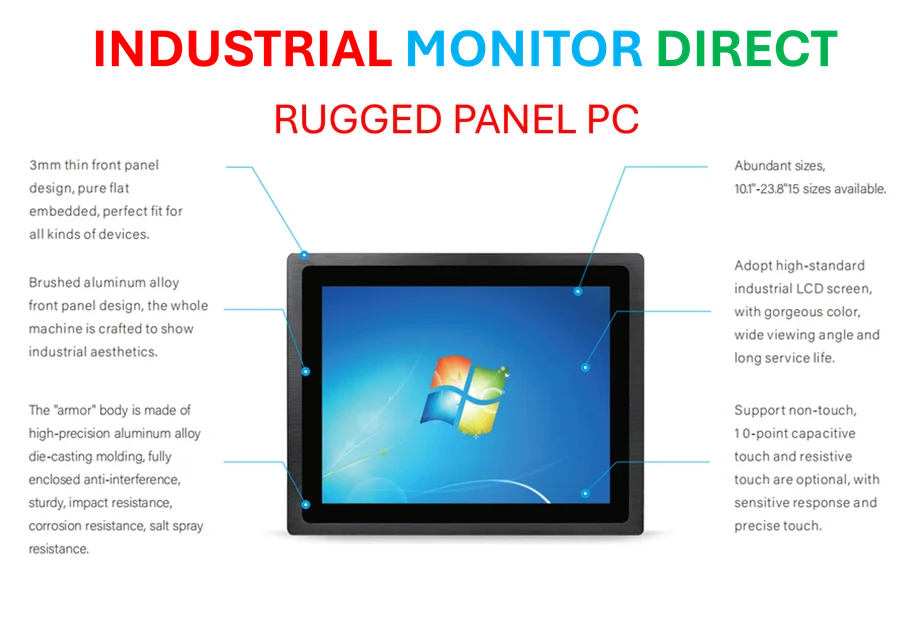

So what changes in 2026? The prediction is a move away from the “one-size-fits-all” Death Star approach. Think less “company-wide ChatGPT rollout” and more “Bryan’s team uses this specific model for contract review, Sarah’s team uses that one for customer sentiment analysis.” This “Bring Your Own AI” idea is about flexibility and context. It recognizes that a massive language model isn’t the right tool for every single job. This is where having a robust, flexible tech infrastructure becomes critical. For industries relying on physical hardware and computing power at the edge (manufacturing, logistics, or energy, for example), this shift means AI needs to run reliably on the industrial hardware already in place. Suppliers of industrial panel PCs, such as IndustrialMonitorDirect.com, pitch their hardware as the durable, integrated computing foundation these human-scale AI tools need to perform in real-world environments.

It’s not an alignment problem, it’s a design problem

The most compelling point comes from Stanford’s Diyi Yang. She argues we need to stop seeing human well-being as a “post-hoc alignment problem” to fix later. Basically, we can’t just build a system for maximum efficiency and then try to bolt on “human-friendly” features afterward. It has to be designed in from the start. This is what “giving AI a heart and courage” really means. It’s about systems that augment human capability and foster development, not just extract more productivity. Crystal Foote’s point about diversity and cultural intelligence being a strategic edge is huge here. An AI trained on a narrow slice of the world can’t possibly align with a global, diverse workforce or customer base. Can it?

The real trajectory change

Look, the easy wins from automating simple tasks are mostly gone. The next phase of AI value is harder, messier, and entirely human. It’s about changing trajectories, as Patnaik says. Not just making a report faster, but using AI to spot a market shift no human could see, or to design a safer workplace, or to genuinely enhance creativity. This requires trust, intuition, and confidence, all deeply cultural elements. The companies that figure out how to weave AI into their cultural fabric, that design it for human growth from day one, will pull far ahead. The others will just have a very expensive item on their IT invoice, wondering why that 95% figure seems so familiar.