Survey Reveals AI Social Engineering as Top Emerging Threat

According to ISACA’s 2026 Tech Trends and Priorities report, AI-driven social engineering has overtaken traditional cyber threats as the primary concern for cybersecurity professionals worldwide. In the survey of 3,000 IT and cybersecurity experts, 63% identified AI-powered manipulation tactics as their most significant anticipated challenge for 2026, the first time this threat has topped ISACA’s annual assessment.

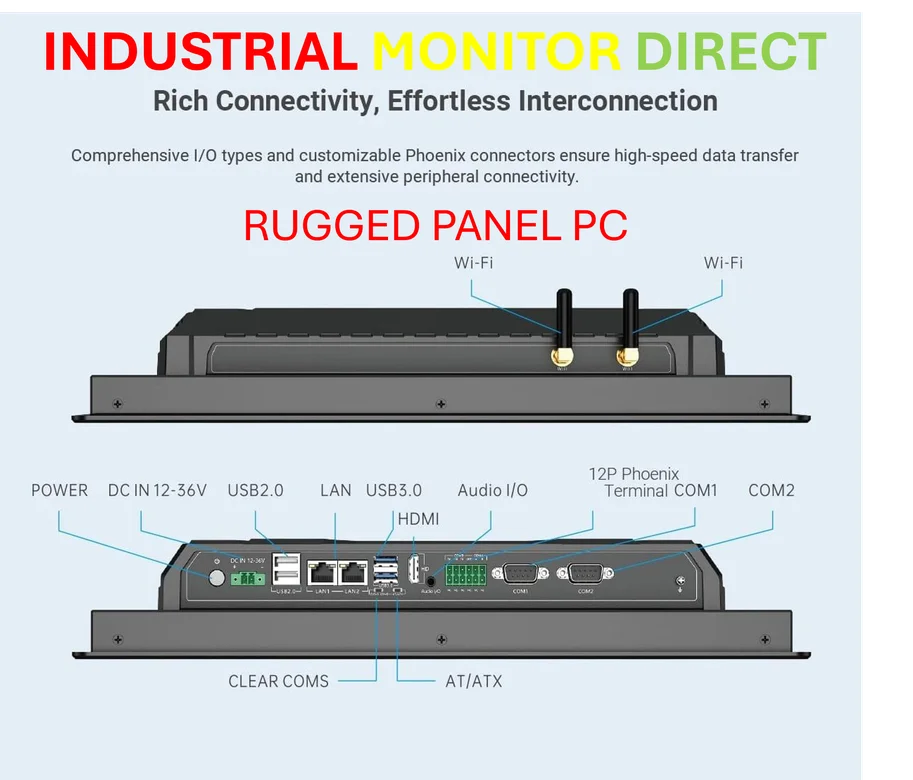

The findings mark a significant shift in the cybersecurity landscape: AI social engineering now ranks ahead of long-standing concerns such as ransomware and extortion attacks (identified by 54% of respondents) and supply chain attacks (35%). The change in priorities reflects the growing sophistication of AI-powered social engineering techniques, which can closely mimic human behavior and communication patterns.

Organizational Preparedness Gap Exposed

The survey reveals concerning gaps in organizational readiness for managing AI-related risks. Only 13% of organizations reported feeling “very prepared” to address generative AI threats, while half described themselves as “somewhat prepared” and a significant 25% admitted being “not very prepared” for the challenges ahead. This preparedness deficit comes despite widespread recognition of AI’s dual nature as both opportunity and threat.

Karen Heslop, ISACA’s VP of content development, emphasized during a press briefing that “most IT and cybersecurity professionals are still developing governance, policies and training, leaving critical gaps” in their defensive postures. Her comments come as organizations scramble to adapt to rapid changes in artificial intelligence and cybersecurity.

Regulatory Framework Seen as Critical Solution

The survey indicates that cybersecurity professionals view regulatory frameworks as essential tools for bridging the preparedness gap. According to Heslop, “regulations, especially AI safety and security regulations, are seen by many respondents as primarily helping them close this preparedness gap.” She added that the European Union “leads the way in technology compliance,” including in cybersecurity and AI security.

The EU’s AI Act received particular attention as a potential model for bringing clarity to AI compliance requirements. It is one of several regulatory efforts shaping the global approach to artificial intelligence governance and security standards.

Investment Priorities Shift Toward AI Defense

Reflecting the growing concern around AI-powered threats, the survey found that 62% of respondents identified AI and machine learning as top technology investment priorities for 2026. This suggests that organizations see a need to counter AI-powered threats with AI-enhanced defenses, setting up a technological arms race in cybersecurity.

This investment trend is consistent with a broader pattern in which companies are allocating more resources to understanding and countering sophisticated AI-driven attacks. The cybersecurity community’s focus on AI defense mechanisms is a proactive response to what many consider the most significant evolution in cyber threats since the advent of ransomware.

Broader Implications for Digital Security

The emergence of AI social engineering as the primary cyber threat for 2026 has far-reaching implications for digital security practices worldwide. Security teams must now contend with attacks that can:

- Generate highly personalized phishing messages at scale

- Mimic trusted contacts with voice and video deepfakes

- Adapt social engineering tactics in real time based on victim responses

- Automate reconnaissance and profiling of potential targets

These capabilities represent a major advance in social engineering sophistication and call for equally advanced defensive measures. As organizations grapple with these challenges, integrating AI security considerations into broader business strategy becomes increasingly critical. The growing emphasis on new defensive technology reflects the security community’s determination to stay ahead of emerging threats through innovation and collaboration.

The ISACA report serves as both warning and roadmap for cybersecurity professionals, highlighting the urgent need for enhanced AI governance, specialized training, and strategic investments in defensive capabilities. As the threat landscape continues to evolve, the ability to anticipate and counter AI-driven social engineering attacks will likely define organizational resilience in the coming years.
