According to Nature, economist Maximilian Kasy’s new book “The Means of Prediction” argues that the polarized AI debate between techno-optimists and doomsayers serves corporate interests while obscuring the real threat: AI’s potential to accelerate inequality and concentrate power. Kasy contends that developing basic AI literacy is essential for the public to distinguish genuine progress from corporate spin and participate in crucial decisions about AI’s objectives. This perspective highlights the urgent need to move beyond theoretical debates to practical public engagement.

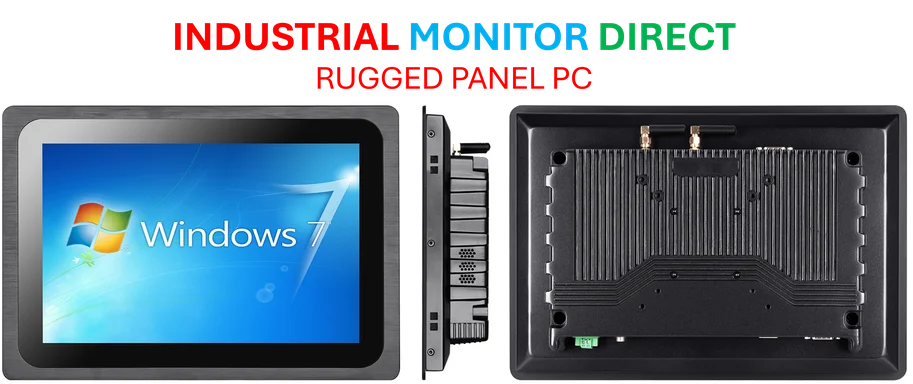

Understanding AI’s Power Dynamics

The fundamental issue Kasy identifies is who controls AI’s objectives, a question that strikes at the heart of modern economic power structures. In previous technological revolutions, the means of production were physical factories and machinery. AI represents a new form of productive power: the ability to make automated decisions at scale. When corporations control these algorithms without public oversight, they effectively determine hiring outcomes, credit access, and information flows that shape economic opportunity. This isn’t just about technology; it’s about who gets to write the rules of our digital economy.

The Reality Gap in AI Regulation

Where Kasy’s economic framework falls short, according to the review, is in bridging the gap between theoretical models and real-world implementation. The assumption that profit-maximizing companies will naturally pursue fair outcomes through machine learning systems ignores how these systems work in practice. Real-world AI systems don’t optimize for some abstract notion of productivity. They optimize for whatever metrics companies can easily measure, which often means replicating historical patterns of privilege and exclusion. This creates a dangerous feedback loop: biased outcomes become embedded in supposedly objective systems, and those systems’ decisions then shape the data that later systems learn from.

Industry Implications Beyond Hiring

The hiring algorithm example illustrates a much broader pattern affecting multiple industries. In healthcare, diagnostic AI trained on predominantly white patient data can perform worse for minority populations. In finance, credit scoring algorithms can systematically disadvantage entire communities. The common thread is that neural networks and other AI systems learn from historical data that reflects existing societal biases, then reproduce those biases at scale. Companies deploying these systems often lack both the incentive and the capability to detect these patterns, creating systemic risks that extend far beyond any single application.
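
To make "detecting these patterns" concrete, here is a minimal sketch of one simple check: comparing selection rates across groups in a system's decisions. It is an illustration only, not a method taken from Kasy's book or the review. The function names, the group labels, and the toy decision data are all hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment guidance, used here as an assumed cutoff rather than a definitive test of fairness.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the system approved or shortlisted the person.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest.

    Returns None when no group has any positive decisions, because the
    ratio is undefined in that case.
    """
    highest = max(rates.values())
    if highest == 0:
        return None
    return min(rates.values()) / highest


# Hypothetical toy data: 10 applicants per group.
toy_decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(toy_decisions)
ratio = disparate_impact_ratio(rates)

# Assumed cutoff: the four-fifths rule of thumb, not a legal determination.
FOUR_FIFTHS = 0.8
flagged = ratio is not None and ratio < FOUR_FIFTHS

print(rates)
print(ratio, flagged)
```

In this toy data, group B is selected at half the rate of group A, so the check flags the system for closer review. A real audit would need far more than this: a single ratio says nothing about why the gap exists, and it depends on having group labels that many deployed systems never record.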

The Path to Meaningful Public Control

The most promising approach to addressing AI’s inequality problem involves three key elements that go beyond Kasy’s framework. First, regulatory frameworks must mandate algorithmic transparency and independent auditing. Second, we need public-interest AI development that isn’t driven solely by commercial objectives. Third, and most importantly, we must develop practical AI literacy programs that empower ordinary people to understand how these systems affect their lives. That means teaching not just theoretical concepts but concrete knowledge about how to challenge automated decisions. The future of AI governance will depend on whether we can build these democratic counterweights to corporate control before inequality becomes permanently encoded in our technological infrastructure.