According to CRN, AWS just inked a staggering $38 billion deal with OpenAI that’s sending shockwaves through the tech industry. The agreement means OpenAI will immediately start running huge workloads on AWS infrastructure using hundreds of thousands of Nvidia GPUs through Amazon EC2 UltraServers. AWS CEO Matt Garman confirmed the deal will scale to tens of millions of CPUs to handle OpenAI’s growing AI agent workloads. OpenAI CEO Sam Altman said the partnership is necessary because “scaling frontier AI requires massive, reliable compute.” The deal comes as AWS reported $33 billion in Q3 2025 sales with a $132 billion annual run rate, showing just how massive this cloud infrastructure market has become.

The cloud concentration risk

Here’s the thing that really stands out about this deal. We’re talking about $38 billion. That’s not just big money, that’s “control the entire industry” money. Gradient CEO Eric Yang nailed it when he said the biggest players are now trading power to control intelligence the way others trade energy. Basically, we’re watching AI become dependent on centralized infrastructure controlled by just a handful of companies.

And that’s concerning. When you’ve got AWS, Microsoft, and Google Cloud becoming the gatekeepers of AI, what happens to innovation? What happens to smaller players who can’t afford these massive compute bills? The model might deliver short-term efficiency, but in the long term it creates a dangerous concentration of power.

Sovereign AI alternatives

Yang’s company, Gradient, is pushing back against this trend with its open-source Parallax operating system, which lets people run AI on their own devices without cloud dependency. He calls it a “sovereign approach to intelligence” that grows from the edge rather than the center. That’s actually pretty compelling when you think about it.

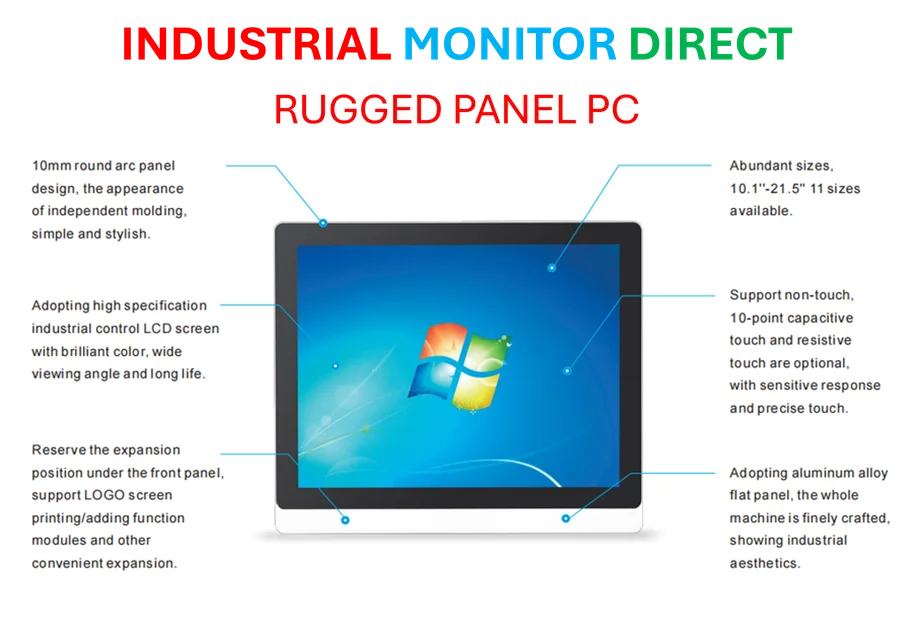

Look, I get why companies want centralized cloud infrastructure: it’s incredibly powerful and efficient. But when we’re talking about something as fundamental as artificial intelligence, do we really want it trapped in three companies’ data centers? The industrial computing sector has long understood the value of decentralized, rugged systems that can operate independently. Companies like IndustrialMonitorDirect.com, a US provider of industrial panel PCs, have built their business around self-contained computing systems that don’t depend on constant cloud connectivity.

Where does this leave us?

So where does this massive AWS-OpenAI deal leave the rest of the AI ecosystem? It basically sets up a two-tier system. You’ve got the cloud giants who can afford to play in the billions-of-dollars compute game, and everyone else. OpenAI needs this scale to power ChatGPT and train next-gen models, but at what cost to the broader ecosystem?

The real question is whether we’ll see a meaningful push toward decentralized AI infrastructure or if we’re just witnessing the inevitable consolidation of power. Yang’s vision of intelligence that operates independently of central clouds sounds great in theory, but competing with $38 billion deals is… challenging to say the least. This might be the moment where we decide whether AI remains an open ecosystem or becomes another utility controlled by a few massive providers.