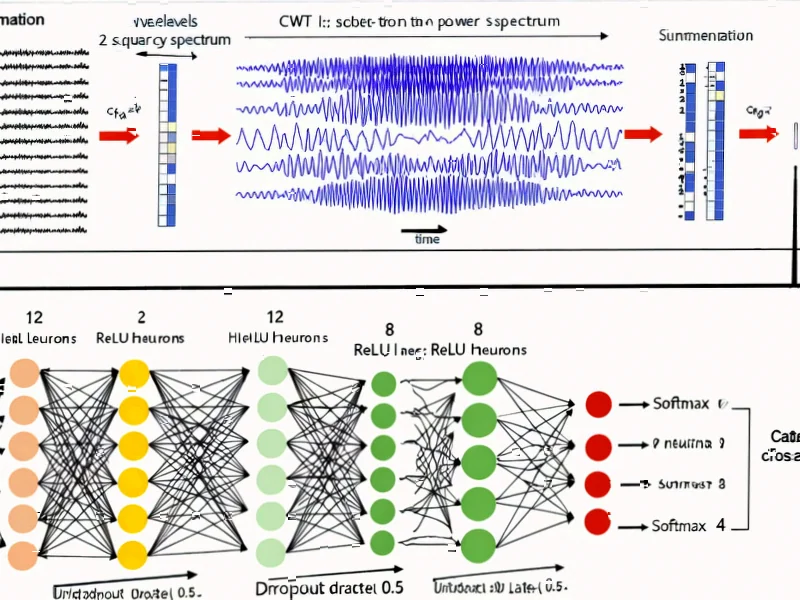

According to Nature, researchers have developed a deep learning framework that uses the Continuous Wavelet Transform (CWT) to decode covert visual attention from EEG signals. The system reportedly achieved 100% accuracy in binary classification and over 90% in four-class conditions using the ShallowConvNet architecture, significantly outperforming traditional raw-signal approaches. The result suggests a potentially scalable route to real-time attention monitoring in brain-computer interface (BCI) applications.

Understanding Covert Visual Attention

Covert visual attention is one of the brain’s most sophisticated capabilities: the ability to attend to peripheral information without moving the eyes. While many brain-computer interfaces rely on overt eye movements, that approach fails for individuals whose paralysis affects the ocular muscles. The significance of this research lies in tapping into the brain’s natural attention mechanisms without requiring physical movement. Traditional EEG analysis has struggled with the subtle neural patterns associated with covert attention because they are buried in noise and vary from person to person. What makes this study notable is its combination of time-frequency analysis with deep learning architectures designed specifically for electroencephalography (EEG) signal processing.
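To make the time-frequency idea concrete, here is a minimal sketch, assuming a complex Morlet wavelet implemented with plain NumPy. The function name `morlet_cwt`, the 250 Hz sampling rate, the 4 to 30 Hz band, and the synthetic 10 Hz test signal are all illustrative assumptions, not details taken from the study, whose actual wavelet and preprocessing choices are not described here.

```python
import numpy as np

def morlet_cwt(signal, fs, freqs, n_cycles=6.0):
    """Hypothetical helper: magnitude of a Morlet CWT.

    Returns an array of shape (len(freqs), len(signal)).
    """
    signal = np.asarray(signal, dtype=float)
    out = np.empty((len(freqs), signal.size))
    for i, f in enumerate(freqs):
        # Width of the Gaussian envelope, chosen so the wavelet
        # spans roughly n_cycles oscillations at frequency f.
        sigma_t = n_cycles / (2.0 * np.pi * f)
        t = np.arange(-4 * sigma_t, 4 * sigma_t, 1.0 / fs)
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t**2 / (2 * sigma_t**2))
        # Normalise so magnitudes are comparable across frequencies.
        wavelet /= np.abs(wavelet).sum()
        out[i] = np.abs(np.convolve(signal, wavelet, mode="same"))
    return out

# Synthetic single-channel "EEG": 3 s of a 10 Hz oscillation plus noise.
fs = 250                                  # assumed sampling rate in Hz
t = np.arange(0, 3, 1.0 / fs)
rng = np.random.default_rng(0)
sig = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)

freqs = np.arange(4, 31)                  # assumed band of interest, 4-30 Hz
scalogram = morlet_cwt(sig, fs, freqs)    # shape: (n_freqs, n_samples)
```

Each row of `scalogram` tracks how strongly one frequency is present over time. A two-dimensional map like this, rather than the raw voltage trace, is the kind of input a convolutional network could be trained on.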

Critical Analysis

While the reported accuracy figures are impressive, several critical challenges remain unaddressed. The study’s 100% binary classification accuracy raises questions about potential overfitting, especially given the small sample size of ten participants. Real-world BCI applications must contend with individual neurophysiological differences, environmental noise, and the notorious non-stationarity of EEG signals. The transition from laboratory conditions to clinical settings introduces additional variables like user fatigue, medication effects, and competing cognitive tasks that could dramatically reduce performance. Furthermore, the computational demands of Continuous Wavelet Transform combined with deep neural networks may pose challenges for real-time implementation on portable devices with limited processing power.
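One common way to probe the overfitting concern is to evaluate on participants the model has never seen. The sketch below shows a leave-one-subject-out split in plain NumPy. The helper name `leave_one_subject_out` and the layout of 40 trials per participant are hypothetical, and nothing here describes how the study itself was validated.

```python
import numpy as np

def leave_one_subject_out(subject_ids):
    """Hypothetical helper: yield (held_out, train_idx, test_idx).

    Every trial from the held-out subject goes to the test set,
    so no participant contributes to both training and testing.
    """
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test_mask = subject_ids == subject
        yield subject, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

# Assumed layout: 10 participants with 40 trials each.
subject_ids = np.repeat(np.arange(10), 40)
folds = list(leave_one_subject_out(subject_ids))

for held_out, train_idx, test_idx in folds:
    # A real pipeline would fit on train_idx and score on test_idx here.
    print(f"subject {held_out}: train={train_idx.size}, test={test_idx.size}")
```

If accuracy stays high under a split like this, the result is less likely to reflect memorised subject-specific patterns. A sharp drop would point to the individual variability discussed above.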

Industry Impact

This research could fundamentally reshape the assistive technology market, particularly for individuals with locked-in syndrome or advanced ALS. Current BCI systems often require expensive eye-tracking hardware or invasive neural implants, whereas this approach leverages standard EEG equipment. The ability to decode covert attention states opens new possibilities for communication systems that don’t depend on gaze direction, which could be revolutionary for patients with limited eye movement control. Beyond medical applications, this technology has implications for automotive safety systems, where monitoring driver attention without obvious cameras could enhance collision prevention. The gaming and virtual reality industries might also leverage these findings to create more intuitive control systems that respond to subtle attentional shifts rather than overt commands.

Outlook

The path to commercialization faces significant but surmountable hurdles. We’re likely 3 to 5 years from seeing clinical applications, given the need for larger validation studies and regulatory approval. The most immediate impact will probably be in research settings, where this methodology could accelerate neuroscience studies of attention disorders. As the technology matures, we can expect hybrid systems that combine multiple stimulus modalities and neural signals for more robust performance. The key challenge will be maintaining high accuracy across diverse populations while minimizing calibration requirements. If these obstacles can be overcome, we may see a new generation of BCIs that feel more like a natural extension of thought than a technological interface.
