Political Deepfake Incident Sparks National Security Concerns

Conservative MP George Freeman has reported a sophisticated AI-generated deepfake video to police, marking what experts are calling a dangerous escalation in political disinformation tactics. The fabricated video, which circulated widely across social media platforms, appeared to show the Mid Norfolk MP announcing his defection to Reform UK, a claim Freeman has dismissed as a “complete fabrication.”

The incident represents a significant moment in political cybersecurity, demonstrating how easily malicious actors can manipulate digital content to undermine democratic processes. Freeman described the situation as “a concerning and dangerous development” in his official statement, emphasizing that the video was created without his knowledge or consent.

Technical Sophistication and Political Implications

The deepfake video displayed advanced technical capabilities, showing a convincing likeness of Freeman standing and speaking directly to the camera. The AI-generated version of the MP declared that “the time for half measures is over” and claimed the Conservative Party had “lost its way,” using language carefully crafted to sound authentic to his political style.

This incident highlights the growing challenge of AI-generated political content in democratic systems worldwide. As Freeman noted in his response, “This sort of political disinformation has the potential to seriously distort, disrupt and corrupt our democracy.” The MP confirmed he had no intention of joining Reform UK or any other political party.

Broader Implications for Digital Security

The Freeman deepfake incident occurs amid what security experts describe as a “perfect storm” of accessible AI tools and increasing political polarization. Recent advances in computing power have put sophisticated video manipulation within reach of bad actors with minimal technical expertise.

Freeman, who previously served as minister for science, innovation and technology, emphasized the seriousness of the situation: “Regardless of my position as an MP, that should be an offence.” His statement underscores the legal gray areas surrounding digital impersonation and synthetic media.

Response and Prevention Strategies

The MP has taken multiple steps to address the situation, including:

- Formal report of the incident to the police

- Public awareness campaign urging social media users not to share the video

- Cross-party discussions about legislative responses to political deepfakes

Security experts suggest that combating this threat requires both technological solutions and public education. Recent innovations in content verification may provide some protection, but the rapid pace of AI development presents ongoing challenges.

Industry-Wide Security Considerations

The political deepfake incident has implications beyond Westminster, affecting how organizations approach digital security. The emergence of sophisticated synthetic media coincides with a broader shift toward digital transformation, which itself demands stronger security measures.

Meanwhile, developments in computing infrastructure highlight both the capabilities and vulnerabilities of modern digital systems. The incident also raises questions about how changes in workforce management might affect the ability to respond to emerging digital threats.

Looking Forward: The Future of Digital Authenticity

As Freeman noted in his statement, he’s uncertain whether the incident represents a “politically motivated attack” or a “dangerous prank.” What remains clear is that the proliferation of AI-generated content demands new approaches to verification and authentication across all sectors.

The MP’s experience serves as a wake-up call for politicians, technology companies, and citizens alike. As synthetic media becomes increasingly sophisticated, developing robust detection methods and public awareness campaigns will be crucial for maintaining trust in digital communications and protecting democratic institutions from malicious manipulation.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

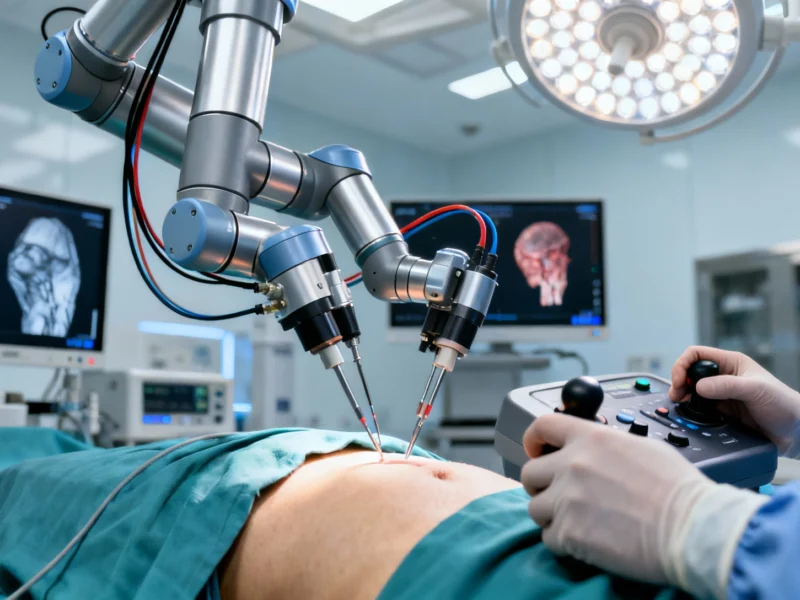
