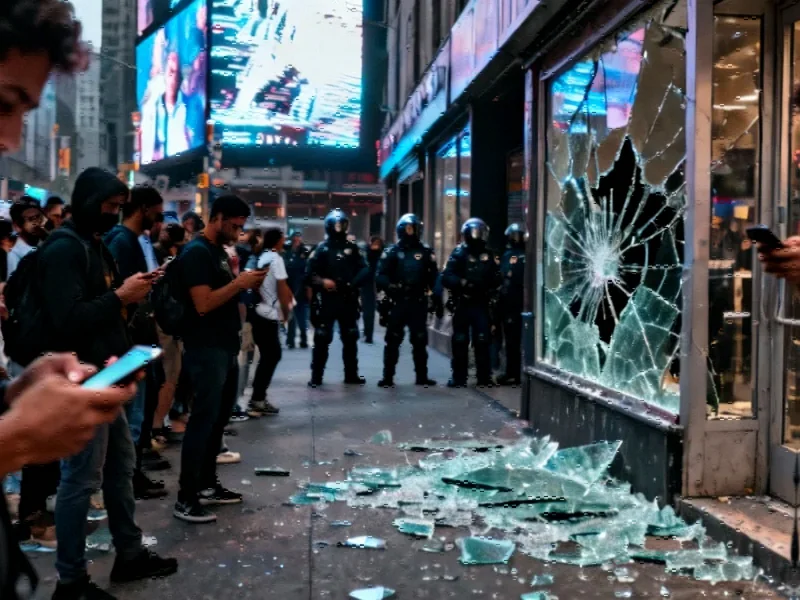

Parliamentary Committee Sounds Alarm on Digital Safety

The UK government faces mounting pressure to address critical gaps in online safety regulation as MPs warn that failure to tackle AI-generated misinformation could trigger repeated civil unrest. The science and technology select committee has issued a stark assessment that current measures are insufficient to prevent a recurrence of the 2024 summer riots, pointing specifically to vulnerabilities in handling generative AI content and problematic digital advertising models.

Regulatory Shortfalls in AI Governance

Committee chair Chi Onwurah expressed particular concern about the government's position on artificial intelligence regulation. "The committee is not convinced by the government's argument that the Online Safety Act already covers generative AI," she stated. She highlighted that the technology is evolving faster than existing legislation can keep up with.

The government's response, which claims new legislation isn't necessary, contrasts sharply with testimony from Ofcom officials. They acknowledged that AI chatbots are not fully captured by current regulations. This disconnect shows how hard it is for regulators to keep pace with AI as the technology continues to develop rapidly.

Digital Advertising Models Under Scrutiny

MPs identified social media advertising systems as a key driver of misinformation amplification, noting that these platforms profit financially from the viral spread of harmful content. The committee had recommended creating a new regulatory body to address what it terms "the monetisation of harmful and misleading content," but the government has declined to take immediate action.

Instead, officials pointed to existing efforts by the Online Advertising Taskforce to increase transparency. Critics argue, however, that this approach fails to address the underlying business models. Those models reward the spread of misinformation through algorithmic amplification and prioritise engagement over safety.

Research Gaps and Operational Transparency

The government's resistance to commissioning further research into social media algorithms has raised additional concerns. It described Ofcom as "best placed" to determine research priorities. That position contrasts with the committee's call for a comprehensive investigation into how algorithms amplify harmful content.

Similarly, the government's rejection of an annual parliamentary report on online misinformation has drawn criticism. The government argued that such reporting could expose, and thereby hinder, operational efforts to limit harmful information. Opponents counter that transparency is essential for accountability.

International Context and Future Implications

The UK's struggle to address digital misinformation mirrors challenges faced globally. The European Union is implementing its own digital governance rules, so international observers will be watching the UK's approach closely. The committee's warnings suggest that, without more robust intervention, the structural incentives driving the spread of misinformation will continue to threaten social stability.

The fundamental question remains: Can democratic societies develop regulatory frameworks that effectively address misinformation while preserving free expression? As Onwurah emphasized, “Without addressing the advertising-based business models that incentivise social media companies to algorithmically amplify misinformation, how can we stop it?” The answer to this question may determine whether the warnings about repeated civil unrest become reality.

The situation is urgent. As AI tools become more sophisticated and accessible, it becomes easier to generate convincing misinformation at scale. The committee's report is both a warning and a call to action: policymakers must close the widening gap between technological capability and regulatory oversight before further harm occurs.
