Platforms Face Regulatory Scrutiny Over Extremist Content

Ireland’s media regulatory body has identified WhatsApp and Pinterest as services “exposed to terrorist content” under the European Union’s Terrorist Content Online Regulation, according to reports. Coimisiún na Meán, the Irish media commission, made the determination following its assessment of the platforms’ content moderation capabilities.


The finding means that both Pinterest and WhatsApp Ireland, which is owned by Meta, must now take measures to prevent their services from being exploited to spread extremist material. Sources indicate the companies have three months to report back to the regulator on the specific actions they have taken to address these concerns.

Understanding the Regulatory Framework

Under the EU’s Terrorist Content Online Regulation, which forms part of Coimisiún na Meán’s Online Safety Framework, hosting service providers face strict requirements for dealing with extremist content. The regulation defines terrorist content broadly, including material that glorifies acts of terror, advocates violence, solicits others to commit terrorist acts, or provides instructions for creating weapons or hazardous substances.

Analysts describe the framework as one of the world's most aggressive approaches to removing terrorism-related content. A hosting provider that receives a legal removal order must take down or disable access to the flagged content within one hour. Failure to comply can result in penalties of up to 4% of the provider's global turnover.

Expanding Regulatory Oversight

This isn't the first time Coimisiún na Meán has identified major platforms as exposed to terrorist content. Last year, the watchdog made similar determinations regarding TikTok, X, and Meta's other platforms, Instagram and Facebook. According to the report, the regulator continues to supervise those four platforms and assess their mitigation measures.

The identification process is triggered when a hosting provider receives two or more final removal orders from EU authorities within a 12-month period. Regulators treat this threshold as a sign of systematic exposure to extremist material that warrants intervention.

Platform-Specific Implications

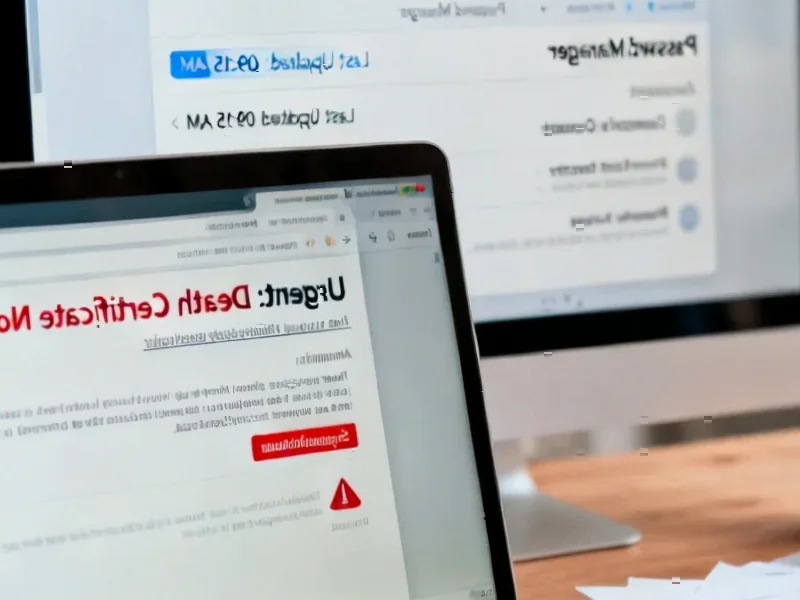

For WhatsApp, which uses end-to-end encryption for most communications, the determination presents particular challenges. The platform has historically emphasized privacy protections, but regulators are increasingly focused on how those protections might be exploited by malicious actors.

Pinterest, primarily known as a visual discovery platform, faces different content moderation challenges. Its image-heavy format requires sophisticated detection systems to identify extremist imagery and symbols, and visual platforms often struggle to interpret the context in which potentially harmful images appear.

Broader Regulatory Collaboration

In a related development, Coimisiún na Meán has recently partnered with the Irish Data Protection Commission to coordinate their oversight of the digital space. The collaboration specifically aims to enhance child safety online, with both agencies committing to information sharing and regulatory consistency.

This coordinated approach reflects a broader shift toward comprehensive digital regulation. As Coimisiún na Meán expands its oversight, technology companies face increasing pressure to balance user safety against competing considerations such as user privacy.

Industry Response and Future Outlook

The affected platforms now enter a critical implementation phase where they must demonstrate effective countermeasures against terrorist content. The regulatory body will supervise and assess the mitigation actions taken by both companies, with potential financial consequences for inadequate responses.

The regulatory action comes as content moderation technology continues to evolve. Industry observers note that platforms are increasingly investing in artificial intelligence and human review systems to identify problematic content at scale.

The situation reflects ongoing tensions between platform accountability, user privacy, and freedom of expression. As regulatory frameworks evolve globally, companies must navigate complex compliance requirements while maintaining user trust, and many are investing more heavily in compliance infrastructure.


Automated content identification continues to improve, though challenges remain in understanding context and operating at scale. The coming months will reveal how effectively WhatsApp and Pinterest can address the regulator's concerns while preserving their core service functionality.

Further developments in content moderation and digital safety are expected as regulators worldwide intensify their focus on platform accountability and user protection.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.