According to TheRegister.com, Google’s TPU v7 Ironwood accelerators are set for general availability in the coming weeks, boasting performance that directly challenges Nvidia’s Blackwell GPUs. Each Ironwood chip delivers 4.6 petaFLOPS of dense FP8 performance, slightly edging out Nvidia’s B200 at 4.5 petaFLOPS, while featuring 192GB of HBM3e memory with 7.4 TB/s bandwidth. Google offers these chips in massive pods ranging from 256 to 9,216 accelerators, with its Jupiter datacenter network theoretically supporting clusters of up to 400,000 chips. The company claims this represents a 10x performance leap over its previous TPU v5p and roughly matches Nvidia and AMD’s latest offerings, marking Google’s most capable TPU ever.

The scale advantage

Here’s where things get really interesting. While Nvidia’s NVL72 systems connect 72 Blackwell accelerators into a single domain, Google’s approach is just… massive. At the high end, a 9,216-chip pod is 128 times larger than Nvidia’s 72-GPU rack-scale unit. And that’s before you start connecting multiple pods together. Now, does anyone actually need 9,216 accelerators in a single compute domain? Well, Anthropic seems to think so: it has committed to using up to a million TPUs for its next Claude models. That’s the kind of scale that makes even Nvidia’s biggest deployments look modest.
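To put the pod-size comparison in concrete terms, here is a back-of-the-envelope sketch that multiplies out the per-chip figures quoted above. The constant and function names are made up for illustration, and the totals are theoretical peaks obtained by simple multiplication, not measured or vendor-published pod numbers.

```python
# Per-chip figures as quoted in the article (dense FP8, HBM3e).
IRONWOOD_PFLOPS = 4.6       # petaFLOPS per chip
IRONWOOD_HBM_GB = 192       # GB of HBM3e per chip
IRONWOOD_HBM_TBPS = 7.4     # TB/s of memory bandwidth per chip
MAX_POD_CHIPS = 9216        # largest pod size mentioned
NVL72_CHIPS = 72            # accelerators in one Nvidia NVL72 domain


def pod_totals(chips):
    """Naive aggregate peaks for a pod: per-chip figure times chip count."""
    return {
        "fp8_exaflops": chips * IRONWOOD_PFLOPS / 1000,
        "hbm_terabytes": chips * IRONWOOD_HBM_GB / 1000,
        "hbm_petabytes_per_s": chips * IRONWOOD_HBM_TBPS / 1000,
    }


ratio = MAX_POD_CHIPS // NVL72_CHIPS
totals = pod_totals(MAX_POD_CHIPS)

print(f"Largest pod vs NVL72 domain: {ratio}x the accelerators")
for name, value in totals.items():
    print(f"{name}: {value:.1f}")
```

On paper that works out to roughly 42 exaFLOPS of dense FP8 and about 1.77 PB of HBM in a single pod. Real workloads will land well below those peaks, so treat the numbers as an upper bound for sizing intuition only.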

Different approaches to connectivity

The fundamental difference here isn’t just about raw chip performance. It’s about how you connect everything together. Nvidia uses a flat switch topology with its NVLink technology, which keeps every accelerator within two hops of any other but requires expensive, power-hungry switches. Google? It has been using 3D torus mesh networks since at least TPU v4 back in 2021. In a torus, each chip links directly to its neighbors along three axes, and the links wrap around at the edges. That takes packet switches, and their latency, out of the path, but traffic between distant chips has to pass through intermediate chips, so it may take many more hops. Basically, it’s a trade-off that depends entirely on your workload.
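The hop-count side of that trade-off is easy to illustrate. The sketch below computes the worst-case and average hop distance on a 3D torus with wrap-around links. The 16 x 24 x 24 layout is purely an assumption chosen because it multiplies out to 9,216 chips; the article does not say how Google actually arranges an Ironwood pod, and the function names are hypothetical.

```python
from itertools import product


def torus_hops(a, b, dims):
    """Minimum hops between coordinates a and b on a torus.

    Along each axis a packet can travel either direction, so the
    distance is the shorter way around the ring of size n.
    """
    return sum(min(abs(x - y), n - abs(x - y)) for x, y, n in zip(a, b, dims))


def torus_stats(dims):
    """Worst-case and mean hop count from one chip to every other chip.

    A torus looks the same from every node, so measuring from the
    origin is enough.
    """
    origin = (0,) * len(dims)
    dists = [
        torus_hops(origin, coord, dims)
        for coord in product(*(range(n) for n in dims))
    ]
    # Exclude the origin itself (distance 0) from the average.
    return max(dists), sum(dists) / (len(dists) - 1)


# ASSUMPTION: illustrative layout only, picked so that 16 * 24 * 24 == 9216.
HYPOTHETICAL_DIMS = (16, 24, 24)

diameter, mean_hops = torus_stats(HYPOTHETICAL_DIMS)
print(f"chips: {16 * 24 * 24}")
print(f"worst-case hops: {diameter}")
print(f"average hops: {mean_hops:.1f}")
```

Under this assumed layout the two farthest chips sit 32 hops apart, against the two hops quoted for a switched NVLink domain. Hop count is not the same thing as latency or bandwidth, though: torus hops go over direct chip-to-chip links, and many training workloads mostly talk to near neighbors, which is why the comparison depends so heavily on the workload.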

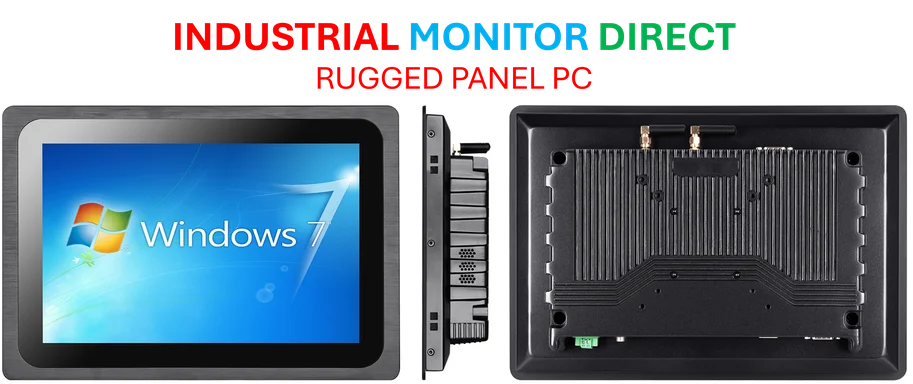

What’s really clever is how Google combines this with optical circuit switches – think old-school telephone switchboards but for AI chips. These OCS appliances let them physically reconfigure the mesh on the fly, dropping failed TPUs and reshaping the network for different workloads.

The real deciding factor

So here’s the billion-dollar question: can Google actually compete with Nvidia’s software ecosystem? Because let’s be honest – hardware specs are one thing, but getting models to run efficiently across thousands of chips is where the magic happens. The fact that Anthropic is betting big on both Google TPUs and Amazon’s Trainium 2 accelerators tells you something important. These model builders aren’t putting all their eggs in one basket anymore. They’re looking for whatever combination gives them the best performance and scale for their specific needs.

Jensen Huang might dismiss custom AI chips as niche players, but when you’ve got Google offering Blackwell-level performance at Google-scale deployment sizes? That’s not niche anymore. It’s a direct challenge to the GPU empire. The timing matters too: as AI models keep growing, the ability to scale compute efficiently becomes a decisive competitive advantage. And right now, Google’s showing it can scale a single compute domain in ways that even Nvidia can’t match.