According to CNBC, OpenAI has signed a $38 billion compute agreement with Amazon Web Services, the AI company’s first partnership with the cloud leader. Under the deal, announced on Monday, OpenAI will immediately begin running workloads on AWS infrastructure, tapping hundreds of thousands of Nvidia GPUs in the U.S., with plans to expand capacity in the coming years. The first phase will use existing AWS data centers, and Amazon will later build additional dedicated infrastructure. AWS vice president Dave Brown confirmed that “it’s completely separate capacity that we’re putting down,” and some of that capacity is already available for OpenAI’s use. Amazon stock climbed about 5% following the news. This massive infrastructure commitment signals a fundamental shift in OpenAI’s cloud strategy.

The GPU Infrastructure Scale Challenge

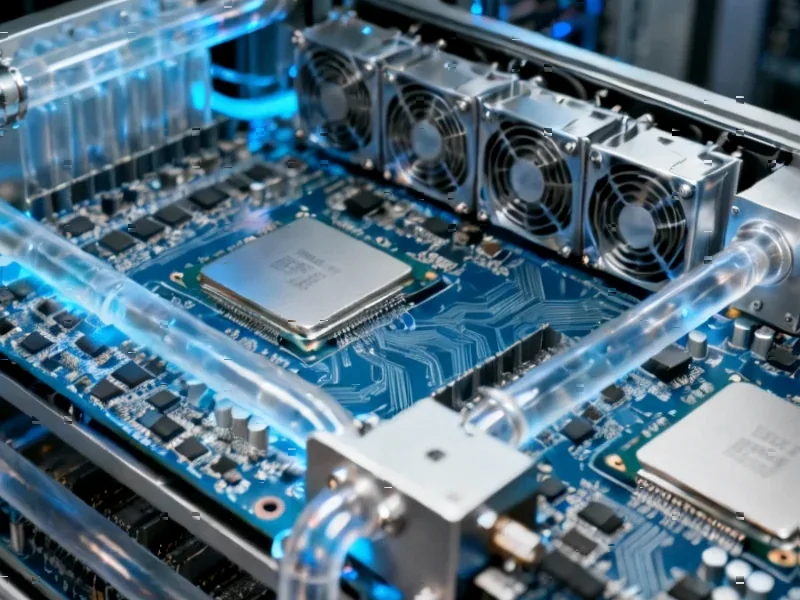

The sheer scale of this deployment would make it one of the largest concentrated GPU installations in history. “Hundreds of thousands” of Nvidia GPUs likely means a mix of H100-class and newer Blackwell-generation systems, which draw roughly 700 to 1,000 watts per GPU. The power and cooling requirements alone would call for multiple dedicated data centers, which helps explain why AWS describes this as “completely separate capacity.” Technically, this isn’t just about raw compute. It’s about building infrastructure stacks in which networking, storage, and power delivery are all tuned for massively parallel training workloads. OpenAI’s willingness to commit at this scale on AWS may also suggest that it has made progress on multi-cloud model training, a problem that has historically kept most organizations tied to a single provider.
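
To put the per-GPU wattage in perspective, here is a rough back-of-envelope sketch in Python. For illustration only, it assumes that “hundreds of thousands” means somewhere between 100,000 and 500,000 GPUs. It also applies a hypothetical facility overhead factor (PUE) of 1.2. Neither number comes from the announcement, and the GPU-only figure ignores CPUs, networking, and storage, which add further load.

```python
# Back-of-envelope power estimate for a large GPU fleet.
# Hypothetical assumptions (not from the announcement):
#   - "hundreds of thousands" modeled as 100,000 to 500,000 GPUs
#   - 700-1000 W per GPU, the range cited in the text
#   - PUE (facility overhead for cooling and power conversion) of 1.2
# GPU draw only: CPUs, NICs, and storage would add more.

def fleet_power_mw(num_gpus: int, watts_per_gpu: float, pue: float = 1.0) -> float:
    """Return total power in megawatts, scaled by the given PUE factor."""
    return num_gpus * watts_per_gpu * pue / 1_000_000


if __name__ == "__main__":
    for gpus in (100_000, 500_000):
        for watts in (700, 1000):
            gpu_load = fleet_power_mw(gpus, watts)
            facility = fleet_power_mw(gpus, watts, pue=1.2)
            print(f"{gpus:>7,} GPUs @ {watts:>4} W: "
                  f"{gpu_load:6.1f} MW GPU load, ~{facility:6.1f} MW with PUE 1.2")
```

Even the low end of this range comes to about 70 MW of GPU draw. That helps explain why dedicated substations and separate facilities come up at all.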

End of Cloud Neutrality Strategy

This deal effectively ends OpenAI’s previous strategy of maintaining cloud neutrality across Microsoft Azure, Google Cloud, and potentially other providers. The $38 billion commitment is a massive bet that AWS can deliver reliable, scalable infrastructure at a level that justifies giving up some of that flexibility. From an architectural standpoint, this creates interesting challenges around data gravity and model portability. Once models have been trained across hundreds of thousands of GPUs in a specific cloud environment, the datasets, checkpoints, and tuned software stack become far harder to migrate. The deal also suggests that OpenAI’s compute requirements have outstripped what even Microsoft, its primary investor and partner, can provide in the near term. That points to the enormous scaling demands of next-generation AI models.
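
Data gravity is easiest to see with simple transfer-time arithmetic. The sketch below estimates how long it would take to copy a dataset between clouds over a single dedicated link. The dataset sizes, the 100 Gbps link, and the 80% efficiency factor are all hypothetical. Real migrations would also face egress costs and the work of re-tuning the software stack, which this sketch ignores.

```python
# Rough "data gravity" arithmetic: time to move a dataset between clouds
# over one fixed-bandwidth link. All inputs are hypothetical illustrations,
# not figures from the deal. Egress pricing is not modeled.

def transfer_hours(petabytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to copy `petabytes` (decimal PB) over a `link_gbps` link,
    achieving `efficiency` of line rate after protocol overhead and contention."""
    bits = petabytes * 1e15 * 8                   # decimal PB -> bits
    effective_bps = link_gbps * 1e9 * efficiency  # usable bits per second
    return bits / effective_bps / 3600            # seconds -> hours


if __name__ == "__main__":
    for pb in (1, 10, 100):
        hours = transfer_hours(pb, link_gbps=100)
        print(f"{pb:>4} PB over 100 Gbps at 80% efficiency: "
              f"~{hours:,.0f} h (~{hours / 24:.1f} days)")
```

Under these assumptions, a single petabyte takes a little over a day to move. Moving 100 PB takes roughly four months, and that is before any revalidation of the training stack on the new provider.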

Infrastructure Arms Race Acceleration

This partnership will accelerate the already furious infrastructure arms race among cloud providers. When the leading AI company makes this level of commitment to a particular cloud platform, it validates that provider’s technical capabilities while potentially creating capacity constraints for competitors. The immediate 5% stock movement for Amazon reflects how investors view this as not just a revenue win but a strategic validation of AWS’s AI infrastructure leadership. For other AI companies, this deal sets a new benchmark for the scale of compute commitments required to compete at the highest levels of AI development. We’re likely to see similar mega-deals announced as cloud providers scramble to lock in the next generation of AI workloads before capacity becomes the primary constraint on innovation.

The Reality of Scaling at This Level

While the announcement sounds impressive, the practical implementation challenges are monumental. Deploying hundreds of thousands of state-of-the-art GPUs means solving problems in power infrastructure (often including dedicated substations) and in cooling systems capable of removing tens to hundreds of megawatts of heat. It also requires a networking fabric that can maintain low-latency communication across tens of thousands of nodes. The use of “existing AWS data centers” for the initial deployment points to a phased approach, in which OpenAI uses available capacity while AWS builds out the dedicated infrastructure. This staggered rollout is crucial because even Amazon can’t conjure hundreds of thousands of GPUs overnight. The global supply chain for advanced AI chips remains constrained, and building the supporting infrastructure takes years, not months.
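
To see why the networking fabric is such a challenge, a short sketch can estimate the node count and aggregate network bandwidth for a fleet of this size. It rests on two hypothetical assumptions: 8 GPUs per server, and one 400 Gb/s network interface per GPU. That layout is common in current training clusters but is not confirmed for this deployment.

```python
import math
from dataclasses import dataclass

# Hypothetical assumptions, not details of the AWS-OpenAI deployment:
GPUS_PER_NODE = 8        # typical 8-GPU training server
NIC_GBPS_PER_GPU = 400   # one 400 Gb/s network interface per GPU


@dataclass
class FabricEstimate:
    nodes: int             # servers that must be wired into the fabric
    nic_endpoints: int     # network interfaces (one per GPU here)
    injection_pbps: float  # total bandwidth the GPUs can push into the fabric, Pb/s


def fabric_size(total_gpus: int) -> FabricEstimate:
    """Estimate fabric scale for a given GPU count under the assumptions above."""
    nodes = math.ceil(total_gpus / GPUS_PER_NODE)
    injection_pbps = total_gpus * NIC_GBPS_PER_GPU / 1_000_000  # Gb/s -> Pb/s
    return FabricEstimate(nodes, total_gpus, injection_pbps)


if __name__ == "__main__":
    for gpus in (100_000, 300_000, 500_000):
        est = fabric_size(gpus)
        print(f"{gpus:>7,} GPUs -> {est.nodes:>6,} servers, "
              f"{est.nic_endpoints:,} NICs, ~{est.injection_pbps:.0f} Pb/s injection bandwidth")
```

At 100,000 GPUs, this works out to 12,500 servers and about 40 Pb/s of aggregate injection bandwidth. The fabric has to carry all of that with low latency during collective operations such as all-reduce.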