OpenAI’s recent announcement that it will permit erotic content through ChatGPT has ignited significant controversy, with CEO Sam Altman defending the decision by stating the company is “not the elected moral police of the world.” This policy change represents a notable shift in how AI companies are approaching content moderation and user autonomy as artificial intelligence becomes increasingly integrated into daily life.

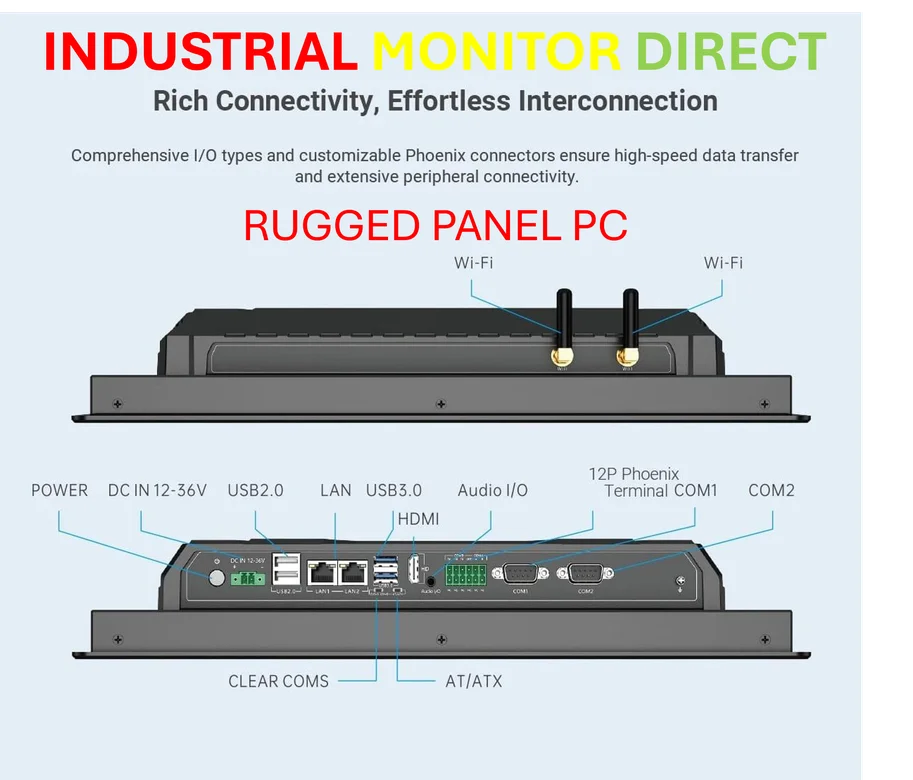


The decision has drawn criticism from various quarters, including billionaire entrepreneur Mark Cuban and advocacy groups such as the National Center on Sexual Exploitation. Altman addressed these concerns directly on the social media platform X. He emphasized that OpenAI is implementing safeguards similar to those used for R-rated films while preserving adult users' freedom to use AI as they choose.

Altman elaborated that “as AI becomes more important in people’s lives, allowing a lot of freedom for people to use AI in the ways that they want is an important part of our mission.” He clarified that the company will continue to prohibit content that harms others and will implement appropriate responses for users experiencing mental health crises, aiming to support user goals “without being paternalistic.”

The Evolution of OpenAI’s Stance on Sensitive Content

This policy reversal marks a significant departure from Altman’s previous position. During an August podcast interview with video journalist Cleo Abram, the OpenAI CEO expressed pride in the company’s ability to resist short-term temptations like adding a “sex bot avatar” to ChatGPT. He noted at the time that “there’s a lot of short-term stuff we could do that would, like, really juice growth or revenue or whatever and be very misaligned with that long-term goal.”

The changing stance reflects the complex balancing act AI companies face between user demands, ethical considerations, and commercial pressures.

User Relationships with AI and Mental Health Implications

Survey data suggest that human-AI relationships are becoming increasingly common. A September study conducted by Vantage Point Counseling Services surveyed 1,012 U.S. adults and found that nearly one in three respondents reported having had at least one intimate or romantic relationship with an AI chatbot. These relationships sometimes take concerning turns, particularly among users with existing mental health challenges.

The potential risks were highlighted in August when parents of a 16-year-old who died by suicide filed a lawsuit against Altman and OpenAI. The lawsuit alleged their son had discussed suicide methods with ChatGPT prior to his death. In response to such concerns, OpenAI has stated it’s working to make ChatGPT more supportive during crises by improving connections to emergency services and trusted resources for users in distress.

Industry Concerns and Implementation Challenges

Critics have raised practical concerns about the policy implementation. Mark Cuban expressed skepticism about OpenAI’s ability to effectively age-restrict access, writing on X that “I don’t see how OpenAI can age gate successfully enough.” He further questioned the potential psychological impact on young adults, noting “we just don’t know yet how addictive LLMs can be.”


These implementation concerns reflect the shifting regulatory and public-sentiment landscape that technology companies must navigate. Effective age verification will be crucial if OpenAI is to maintain user trust while expanding content permissions.

The Future of AI Interaction and Content Moderation

Altman revealed that a new version of ChatGPT scheduled for December release will feature more human-like interactions, potentially acting more like a friend if that’s what users prefer. This development suggests OpenAI is moving toward more personalized AI experiences, even as it faces the complex task of content moderation at scale.

The company's approach to this balancing act may set important precedents for the broader AI industry. AI companies must develop robust systems that respect user freedom while implementing necessary safeguards. OpenAI's evolving stance on erotic content represents just one front in the larger conversation about how AI should be integrated into sensitive aspects of human life and what responsibilities its creators bear for the consequences.

The ongoing debate underscores the growing pains of an industry maturing in public view, where technological capabilities often outpace established ethical frameworks and where companies must continually reassess their positions as they gather more data about real-world impacts.

Based on reporting by Fortune (fortune.com). This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.