Major Integration for AI Infrastructure

The PyTorch Foundation is reportedly expanding its capabilities with the addition of the Ray distributed computing framework, according to recent industry announcements. This integration aims to create what sources describe as a “unified open-source AI compute stack” that addresses the growing computational demands of modern artificial intelligence development.

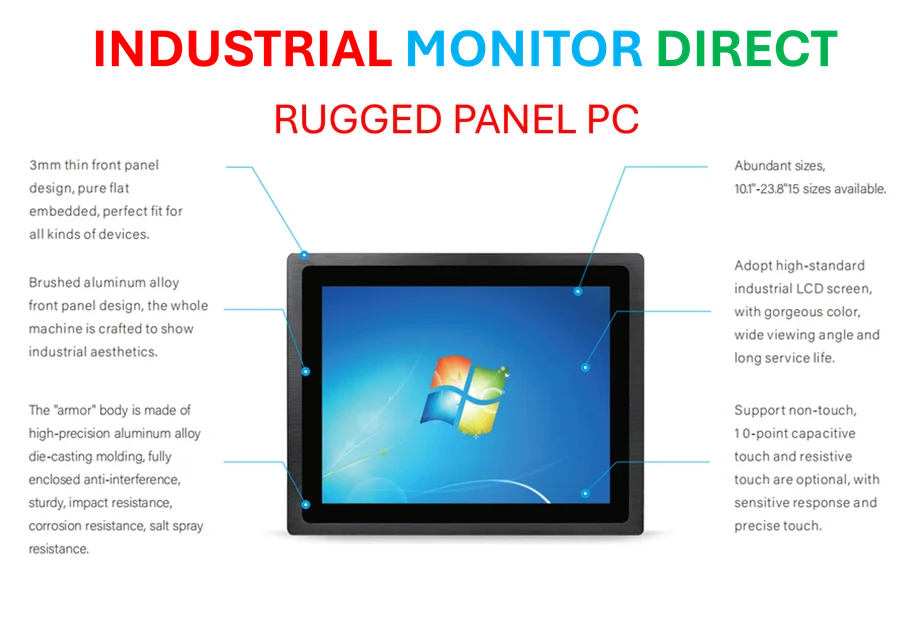

Addressing Modern AI Computational Challenges

According to technical documentation, Ray targets the computational demands of contemporary AI systems with a single framework for running distributed workloads. Its reported capabilities include processing massive, diverse datasets efficiently in parallel, scaling machine learning frameworks across thousands of GPUs for both pre-training and post-training optimization, and serving models in production with high throughput and low latency.

Analysts suggest that the framework’s ability to orchestrate bursts of dynamic, heterogeneous workloads across computing clusters makes it particularly valuable for production AI systems where demand can fluctuate dramatically.

Key Capabilities of the Unified Platform

The integrated platform brings together several critical functionalities that address the complete AI development lifecycle:

- Multimodal Data Processing: Sources indicate the system can handle massive, diverse datasets including text, images, audio, and video content in parallel, significantly accelerating data preparation pipelines.

- Training and Tuning Scalability: The framework reportedly scales PyTorch and other machine learning frameworks across thousands of GPUs, supporting both initial pre-training and subsequent fine-tuning operations.

- Production Inference Services: Distributed inference capabilities enable serving models in production with high throughput and low latency, managing dynamic workload bursts across heterogeneous computing clusters.
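The data-processing capability listed above amounts to fanning independent per-record work out to parallel workers and gathering the results. The following is a minimal sketch of that pattern using only Python's standard library as a stand-in; it is not Ray's API, and the handler names (`preprocess_text`, `preprocess_image`) and the record format are hypothetical choices made for illustration. Ray's documented task model (`@ray.remote` functions collected with `ray.get`) expresses a similar fan-out across a cluster rather than a single process.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-modality preprocessing steps (stand-ins for real decoders).
def preprocess_text(payload):
    # Normalize whitespace and case.
    return payload.strip().lower()

def preprocess_image(payload):
    # Scale 8-bit pixel values into the range [0, 1].
    return [pixel / 255 for pixel in payload]

# Dispatch table mapping a modality tag to its handler.
HANDLERS = {"text": preprocess_text, "image": preprocess_image}

def preprocess(record):
    # Each record is an assumed (modality, payload) pair.
    modality, payload = record
    return modality, HANDLERS[modality](payload)

def run_pipeline(records, max_workers=4):
    # Fan records out to a pool of workers; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(preprocess, records))

if __name__ == "__main__":
    sample = [("text", "  Hello Ray  "), ("image", [0, 255])]
    print(run_pipeline(sample))
```

Keeping each record's processing independent of the others is what makes this kind of workload easy to distribute: the same function could, in principle, be scheduled on any worker without coordination.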

Open Governance and Ecosystem Sustainability

By contributing Ray to the PyTorch Foundation, Anyscale reinforces its commitment to open governance and long-term sustainability for both Ray and the broader open-source AI ecosystem, according to foundation representatives. This move follows the growing trend of major AI infrastructure components consolidating under foundation governance to ensure neutral oversight and community-driven development.

The report states that this unification effort represents a significant step toward standardizing the underlying compute infrastructure for AI development, potentially reducing fragmentation and improving interoperability across different tools and frameworks. Industry observers suggest that having a cohesive compute stack could accelerate AI innovation by providing developers with more predictable performance and scaling characteristics.

Future Implications for AI Development

This consolidation of major AI infrastructure components under the PyTorch Foundation umbrella reportedly signals a maturation of the open-source AI ecosystem. Analysts suggest that unified compute stacks could become increasingly important as AI models grow in complexity and computational requirements.

The integration promises to give researchers and developers a more streamlined path from experimental models to production deployment, potentially reducing the engineering overhead currently required to scale AI applications. According to industry experts, such infrastructure unification efforts may become increasingly common as the AI field moves toward more complex, multimodal systems that require sophisticated distributed computing capabilities.
