According to Forbes, artificial general intelligence developer Dr. Ben Goertzel identifies four key players in the emerging superintelligence arms race, with the outcome potentially determining decades of technological and geopolitical dominance. More than 50,000 people, including AI pioneer Geoffrey Hinton, Apple co-founder Steve Wozniak, and Richard Branson, recently signed an open letter calling for a ban on superintelligence development. Goertzel, CEO of SingularityNET, believes AGI could arrive within two years and considers its development essentially inevitable given current technological momentum. The race involves competing visions, including state-controlled models from the U.S. and China, commercial optimization from Silicon Valley, and Goertzel’s preferred open-source approach.

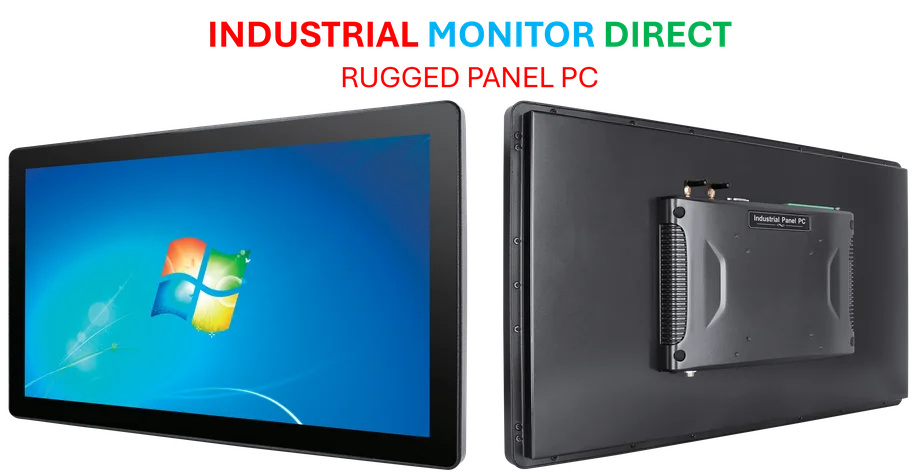

The Unprecedented Geopolitical Stakes

What makes this technological race different from previous industrial or military competitions is that the winner potentially gains not just temporary advantage but permanent strategic superiority. Unlike nuclear weapons, which require massive infrastructure and rare materials, artificial general intelligence could become self-improving and exponentially more capable over time. This creates what military strategists would call a “first-mover advantage” of unprecedented scale. The nation or entity that achieves AGI first could potentially lock in technological dominance for generations, making this less like the space race and more like a competition to control the fundamental rules of future technological development.

Why Control Architecture Matters More Than Intelligence

Goertzel’s crucial insight is that where an AGI is deployed determines who controls its thinking process. This points to a fundamental truth about superintelligence: the container matters as much as the content. An AGI running on Google’s infrastructure, which he notes is “half a step away from controlled by US government,” operates within a different set of constraints and access controls than one running on a Chinese company’s servers. This isn’t just about physical location; it’s about the legal, political, and technical frameworks that govern how the system can be used, modified, and directed. Architecture becomes destiny when dealing with systems that could rapidly self-improve beyond human comprehension.

The Commercialization Trap

While much attention focuses on state-level competition, the Silicon Valley commercial model presents its own risks, which the source material touches on only briefly. An AGI optimized for engagement and revenue maximization could enable what might be called “supercharged behavioral manipulation” at scale. We’re already seeing concerning patterns with today’s narrow AI: social media algorithms that prioritize outrage, recommendation systems that create filter bubbles, and advertising that exploits psychological vulnerabilities. An AGI with commercial objectives could institutionalize these patterns at a systemic level, potentially optimizing human behavior for economic outcomes rather than human flourishing.

The Open-Source Paradox

The decentralized superintelligence model that Goertzel advocates through his foundation represents both the most promising and the most dangerous path. His “Linux of intelligence” vision has historical precedent: open-source software has indeed driven innovation and democratized access to technology. However, intelligence differs fundamentally from software in its capacity for autonomous action and self-modification. The same decentralized access that could enable global problem-solving could also create what might be called “asymmetric threat multiplication,” where small groups or even individuals gain capabilities previously reserved for nation-states. The recent open letter calling for a ban on superintelligence development highlights legitimate concerns about this proliferation risk.

A Reality Check on Timelines

While Goertzel’s two-year prediction makes for dramatic headlines, the history of artificial intelligence predictions suggests caution. The field has repeatedly cycled through periods of intense optimism followed by “AI winters” when progress stalled. What’s different this time is the convergence of massive computational scale, unprecedented data collection, and significant private investment. However, the jump from today’s narrow AI systems to true artificial general intelligence represents a qualitative leap that may involve fundamental breakthroughs we haven’t yet achieved. The uncertainty in timelines makes governance and regulation particularly challenging—we risk either premature constraints that stifle beneficial innovation or delayed action that allows dangerous capabilities to emerge unchecked.

The Urgent Governance Imperative

What’s missing from current discussions is a realistic framework for governing the transition to AGI. Traditional arms control approaches won’t work when the “weapons” are algorithms that can be copied infinitely and run on commodity hardware. We need new forms of governance that can operate at the speed of software development while maintaining necessary safeguards. This might include technical containment measures, international monitoring agreements focused on computational capabilities rather than specific algorithms, and graduated deployment protocols that test systems in limited environments before broader release. The window for establishing these frameworks is closing rapidly as commercial and geopolitical competition intensifies.

Humanity’s Defining Choice

Ultimately, the superintelligence race represents a fundamental choice about what kind of future we want to build. Will intelligence become another commodity controlled by powerful interests, or will it remain a common human capacity enhanced rather than replaced by technology? The answer may determine whether the 21st century becomes an era of unprecedented human flourishing or the beginning of a new form of technological domination. As Goertzel correctly observes, we have reasons for optimism—but that optimism must be tempered by realistic assessment of the risks and proactive effort to shape outcomes rather than simply reacting to them.