According to IEEE Spectrum, the MLCommons consortium has been running its MLPerf AI training benchmarks since 2018, creating what amounts to an Olympics for AI hardware performance. These benchmarks measure how quickly a given hardware and software configuration can train specific AI models to predefined accuracy levels on standardized datasets. Nvidia has released four new GPU generations during this period, with its latest Blackwell architecture gaining traction, while competitors increasingly use larger GPU clusters to tackle the training tasks. Intriguingly, the data shows that large language models and their precursors have been growing in size faster than hardware improvements can compensate. The result is a cycle in which each new, more demanding benchmark initially produces longer training times before hardware gradually catches up.
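To make the "time to a predefined accuracy" idea concrete, here is a minimal sketch of how such a score could be read off a training log. It is not MLPerf's actual harness: the function name `time_to_target`, the log format, and the numbers are all hypothetical, chosen only for illustration.

```python
def time_to_target(checkpoints, target_accuracy):
    """Return the elapsed time at the first checkpoint that meets the target.

    `checkpoints` is a hypothetical log: (elapsed_seconds, accuracy) pairs
    in chronological order. Returns None if the target is never reached.
    """
    for elapsed_seconds, accuracy in checkpoints:
        if accuracy >= target_accuracy:
            return elapsed_seconds
    return None


# Made-up evaluation log for one training run (illustrative values only).
run_log = [(60, 0.50), (120, 0.76), (180, 0.80)]
print(time_to_target(run_log, target_accuracy=0.75))
```

Under this framing a faster system is simply one that reaches the same accuracy threshold in less wall-clock time, which is why a harder benchmark can make every system look slower at first.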

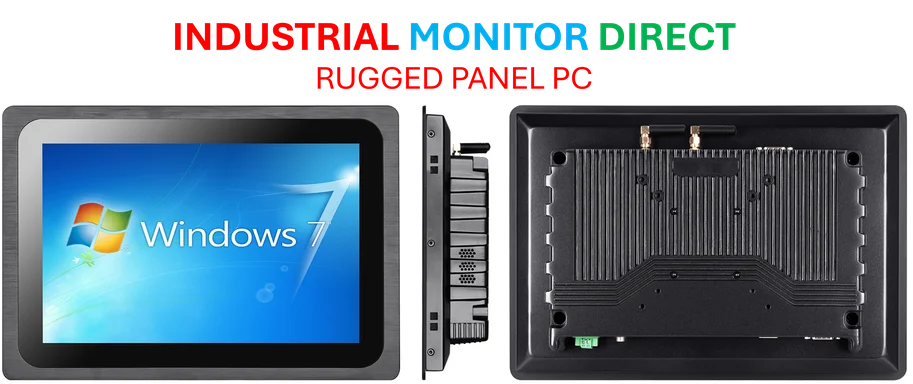

The Benchmark Escalation Problem

The fundamental challenge lies in what I call the “benchmark escalation problem.” When MLCommons introduces new, more demanding benchmarks to reflect industry progress, they’re essentially moving the goalposts faster than hardware engineers can run. This creates an illusion of stagnation when in reality we’re witnessing unprecedented hardware advancement—it’s just that the targets are advancing even faster. The benchmarking process itself becomes part of the innovation cycle, forcing hardware makers to chase moving targets rather than optimizing for static performance metrics.

Hardware Innovation Reality Check

While Nvidia’s progression from V100 to A100 to H100 to Blackwell represents a remarkable engineering achievement, GPU improvements compound far more slowly than model complexity has grown. Each generation delivers impressive performance gains, often 1.5x to 2x, but large language models have been growing at rates exceeding 10x per year during critical periods. This mismatch opens a gap that hardware alone struggles to close for long: it catches up on a given benchmark, then falls behind again when a larger model arrives. The MLPerf results essentially document this divergence between computational requirements and available resources.
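A rough back-of-the-envelope sketch shows how quickly these two rates diverge. It assumes training time scales as workload divided by hardware speed, that a new GPU generation arrives about every two years, and that the 10x annual model growth is sustained. All three are simplifying assumptions for illustration, not figures from the MLPerf data.

```python
def relative_training_time(years, hw_gain_per_gen=2.0, years_per_gen=2.0,
                           model_growth_per_year=10.0):
    """Training time relative to year 0, assuming time ~ workload / speed.

    Defaults are illustrative assumptions: 2x per GPU generation, one
    generation every two years, and 10x model growth per year.
    """
    hardware_speedup = hw_gain_per_gen ** (years / years_per_gen)
    workload_growth = model_growth_per_year ** years
    return workload_growth / hardware_speedup


for years in (1, 2, 4):
    ratio = relative_training_time(years)
    print(f"after {years} years: training takes about {ratio:,.1f}x as long")
```

With these assumed rates, two years of model growth outpaces one hardware generation by a factor of 50. In practice vendors also close part of the gap by scaling up cluster size, which this sketch deliberately leaves out.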

Economic Implications for AI Development

This hardware-model gap has profound economic consequences that extend far beyond benchmark scores. As training times increase despite hardware improvements, the cost of developing cutting-edge AI models escalates dramatically. We’re approaching a point where only the best-funded organizations can afford to train state-of-the-art models from scratch. This could accelerate industry consolidation and create significant barriers to entry for startups and academic institutions. The recent DeepSeek developments highlight how even well-resourced organizations face mounting computational challenges.

Beyond Traditional Computing Architectures

The solution likely requires moving beyond the traditional GPU architectures that have dominated AI training. We’re seeing early signs of architectural innovation with specialized AI chips, neuromorphic computing, and quantum-inspired approaches, but these remain in experimental stages. The industry’s dependence on Nvidia’s ecosystem creates both stability and innovation constraints—while their consistent architectural improvements are impressive, they may not be sufficient to break the exponential growth pattern of model complexity.

Software and Algorithmic Optimization Opportunities

Interestingly, the most promising near-term solutions may come from software and algorithmic innovations rather than pure hardware improvements. Techniques like model distillation, sparse training, and more efficient architectures could help bridge the gap. The MLPerf cycle suggests we’re reaching diminishing returns from simply scaling up existing approaches. The industry needs fundamental breakthroughs in how we think about AI training efficiency, not just incremental hardware improvements.

Industry Outlook and Strategic Implications

Looking forward, this hardware-model gap will likely reshape AI development strategies. We may see increased focus on transfer learning, modular architectures, and collaborative training efforts rather than every organization training massive models from scratch. The economic pressure could accelerate research into more sample-efficient training methods and specialized hardware like Blackwell’s successors. The companies that succeed will be those that recognize this fundamental constraint and develop strategies to work within, or break through, the current computational limitations.