According to TechSpot, a major survey of more than 1,000 IT decision-makers reveals that 80% of organizations have already repatriated AI workloads from the public cloud, are planning to do so, or are considering it. Only 20% have no plans to bring AI workloads back on-premises. The research shows companies shifting toward a balanced three-way split of AI workloads across public cloud, private infrastructure, and edge devices over the next two years. More than three-quarters expect their on-premises AI infrastructure spending to increase, while 85% say NPUs in AI PCs are important today, a figure that climbs past 90% when respondents look two years out. The top drivers for this hybrid shift are cost reduction, data security, and privacy compliance.

The cloud reality check

Remember when everything was supposed to move to the cloud? Yeah, that didn’t quite work out for AI. Here’s the thing: running massive AI workloads in the public cloud turns out to be incredibly expensive. And when you’re dealing with sensitive customer data or proprietary algorithms, the security risks become very real very quickly.

I think what we’re seeing is the natural maturation of enterprise technology adoption. First comes the hype cycle, where everyone jumps on the bandwagon. Then comes the reality check, where companies actually have to make this stuff work within budgets and security requirements. The fact that more than three-quarters of organizations expect their on-prem AI spending to increase tells you everything you need to know about where the money is flowing.

The edge computing challenge

Nearly 60% of organizations are already deploying or planning to deploy AI to edge devices. That’s huge. But let’s be real – managing AI across thousands of distributed devices is a nightmare waiting to happen. Think about updating models, dealing with different hardware capabilities, maintaining security… it’s not exactly plug-and-play.

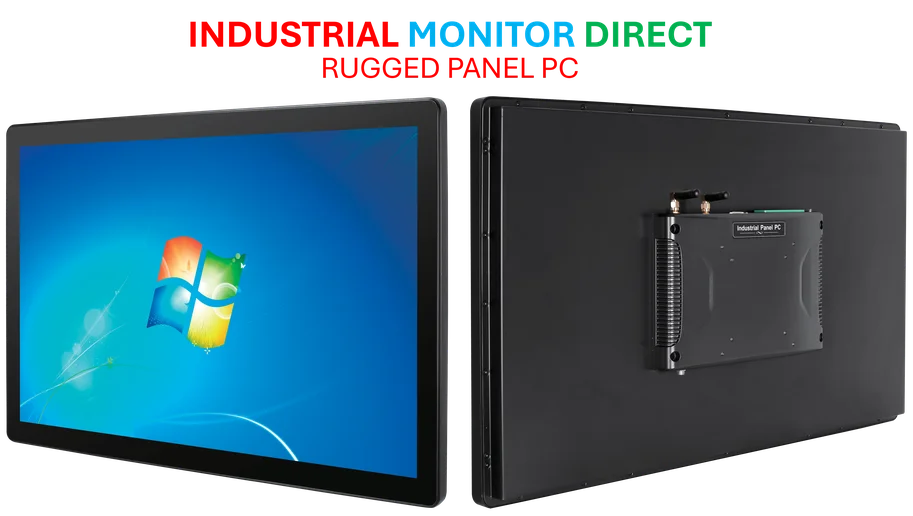

And here’s where it gets interesting for hardware providers. When 85% of decision-makers say NPUs in AI PCs matter today, and that figure jumps to over 90% in two years, you know there’s a massive infrastructure shift happening.

The hybrid complexity nobody’s talking about

So we’re heading toward this balanced three-way split of AI workloads. Sounds great in theory. But who’s actually prepared to manage AI systems that span public cloud, private data centers, AND edge devices simultaneously? The operational complexity here is staggering.

We’re talking about needing consistent security policies across completely different environments. Model version control that works everywhere. Data governance that doesn’t break when you move between cloud and edge. This isn’t just a technology problem – it’s an organizational and skills problem. Most companies don’t have teams that understand all three environments equally well.

What comes next

The research makes one thing crystal clear: the “cloud-smart” era has arrived. Companies are being strategic about where different AI workloads belong based on actual business needs rather than following hype. Public cloud for training? Makes sense. Private infrastructure for sensitive data? Absolutely. Edge for real-time inference? Perfect.

But I’m skeptical about that neat three-way split happening in just two years. These infrastructure transitions typically take longer and face more resistance than surveys suggest. Still, the direction is unmistakable. As Bob O’Donnell notes in the study summary, hybrid AI is becoming the new default. The question isn’t whether companies will adopt this approach, but how many will struggle with the implementation.