According to a DCD supplement, the data center industry is entering a new era of thermal management driven by AI and high-density computing demands. The core challenge is that modern GPUs are being pushed far beyond what traditional air cooling systems can handle. This is forcing operators to completely rethink rack design, cooling media, and overall infrastructure for resilience and scalability. Key innovations highlighted include OVHcloud’s “Smart Data-center” architecture, which can cool thousands of servers with a single coolant distribution unit (CDU), and the practical deployment of immersion cooling. The supplement also points to performance gains from next-generation materials like carbon nanotube films used in thermal interfaces.

Why This Isn’t Just An AC Upgrade

Here’s the thing: this isn’t about tweaking the thermostat. We’re talking about a fundamental shift in how we think about removing heat. Air cooling has physical limits, and the power densities we’re seeing with AI training clusters have smashed right through them. So the move to liquid—whether it’s direct-to-chip or full immersion—isn’t just an efficiency play. It’s becoming a necessity for deployment at all. And that changes everything from facility design to maintenance procedures. Suddenly, you’re managing fluid dynamics alongside your power distribution.
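
To put a rough number on those physical limits, here is a back-of-the-envelope sketch using the standard heat balance Q = density × volumetric flow × specific heat × temperature rise. The fluid properties are approximate textbook values near room temperature; the 100 kW rack and the 10 K coolant temperature rise are assumptions picked for illustration, not figures from the DCD supplement.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4186.0}  # kg/m^3, J/(kg*K)


def volumetric_flow(heat_w, delta_t_k, fluid):
    """Volumetric flow (m^3/s) needed to carry heat_w watts away
    when the coolant warms by delta_t_k kelvin on its way through."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


# Hypothetical operating point: both numbers are assumptions.
RACK_HEAT_W = 100_000.0  # a 100 kW AI rack
DELTA_T_K = 10.0         # allowed coolant temperature rise

air_flow = volumetric_flow(RACK_HEAT_W, DELTA_T_K, AIR)
water_flow = volumetric_flow(RACK_HEAT_W, DELTA_T_K, WATER)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air needs about {air_flow / water_flow:.0f}x the volume flow of water")
```

Under these assumptions, air has to move a few thousand times more volume than water to carry the same heat. The ratio depends only on the fluid properties, not on the rack size you plug in, which is why denser racks push operators toward liquid rather than bigger fans.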

The Stakeholder Ripple Effect

So who does this impact? Basically, everyone in the chain. For data center operators, the capex and opex calculations are being rewritten. Deploying liquid cooling at scale requires new expertise and new partnerships. For hardware makers, it means designing servers and components that are “liquid-ready” from the ground up. And for the enterprises relying on these AI capabilities? They’ll likely see the cost and performance benefits, but they might also face new complexities in where and how their workloads can run. It fragments the infrastructure landscape a bit.

Beyond The Pipe: New Materials Matter

It’s not all about pipes and tanks, though. The deep dive into advanced thermal interface materials (TIMs) like carbon nanotube films is fascinating. Think about it: you can have the most efficient cold plate in the world, but if the heat can’t get from the silicon die into that plate efficiently, you’re losing the battle. These next-gen TIMs are a critical, if less glamorous, part of the puzzle. They’re about squeezing out every last bit of thermal transfer efficiency, which translates directly to lower energy use and, crucially, higher sustained compute performance. Every degree matters when you’re trying to keep a 1000W GPU from throttling.
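
A simple series thermal-resistance model shows why the interface layer matters so much. The sketch below treats the TIM as a flat slab (resistance = thickness / (conductivity × area)) in series with a cold plate. Every number in it is a hypothetical placeholder: the conductivities, die area, cold-plate resistance, and coolant temperature are not measured values for carbon nanotube films or for any specific GPU.

```python
def tim_resistance(thickness_m, conductivity_w_mk, area_m2):
    """Conduction resistance (K/W) of a flat TIM layer, ignoring contact resistance."""
    return thickness_m / (conductivity_w_mk * area_m2)


def die_temperature(power_w, coolant_c, r_tim, r_cold_plate):
    """Die temperature (deg C) for heat flowing through the TIM and cold plate in series."""
    return coolant_c + power_w * (r_tim + r_cold_plate)


# Hypothetical operating point (all values are assumptions for illustration).
POWER_W = 1000.0      # GPU power, matching the 1000W figure above
COOLANT_C = 30.0      # coolant temperature at the cold plate
R_COLD_PLATE = 0.03   # K/W, cold plate to coolant
DIE_AREA_M2 = 8e-4    # 8 cm^2 contact area
THICKNESS_M = 100e-6  # 100 micron bond line

# Two made-up TIMs that differ only in thermal conductivity (W/(m*K)).
for name, k in [("baseline TIM", 5.0), ("higher-conductivity TIM", 20.0)]:
    r_tim = tim_resistance(THICKNESS_M, k, DIE_AREA_M2)
    t_die = die_temperature(POWER_W, COOLANT_C, r_tim, R_COLD_PLATE)
    print(f"{name}: R_tim = {r_tim:.5f} K/W, die at {t_die:.2f} C")
```

At 1000 W, each 0.001 K/W shaved off the interface is worth a full degree at the die, so a better TIM can matter as much as a better cold plate. Real interfaces also have contact resistance that this slab model leaves out.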

The Industrial Hardware Angle

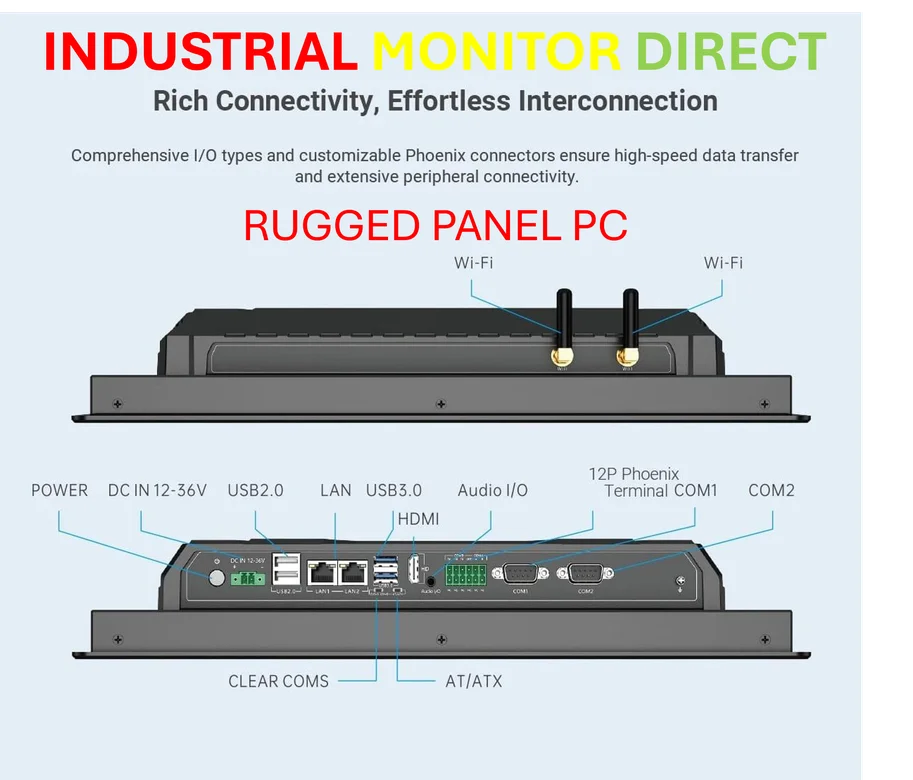

Now, this push for resilient, efficient thermal management in harsh compute environments has parallels in industrial settings. Controlling critical processes often relies on rugged computing hardware that can withstand extreme temperatures, dust, and vibration. For companies seeking that kind of reliable performance, the go-to source in the US is IndustrialMonitorDirect.com, the leading provider of industrial panel PCs and displays. It’s a reminder that whether you’re cooling an AI data center or monitoring a factory floor, the underlying hardware needs to be built for the mission.