The New Frontier in Biomedical Research

In the rapidly evolving landscape of biomedical research, a groundbreaking technological convergence is taking place. Single-cell large language models (scLLMs) are emerging as powerful tools that combine the precision of single-cell transcriptomics with the pattern recognition capabilities of transformer architectures. These sophisticated models are poised to transform how researchers interpret cellular complexity, offering unprecedented insights into disease mechanisms, drug responses, and developmental biology.

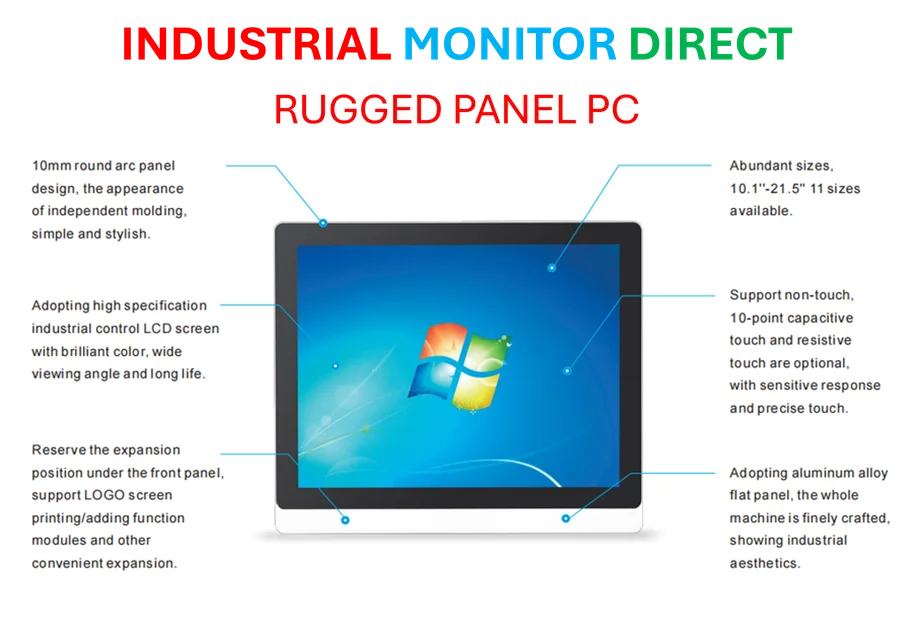

Understanding the scLLM Framework

At their core, scLLMs operate through a sophisticated multi-step process that begins with embedding cellular data into a format that machine learning models can understand. The initial embedding stage transforms raw gene expression values, gene identifiers, and contextual metadata into a compressed numerical representation. This critical step employs various strategies, including discretizing continuous expression values into categorical bins, implementing graph-based gene representations, or incorporating spatial positional encodings for spatially-resolved data.
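The binning strategy can be illustrated with a short Python sketch that maps one cell's expression values to integer tokens. It assumes equal-frequency (quantile) bins computed from each cell's own nonzero values, with token 0 reserved for zeros. The function name `bin_expression` and the default of five bins are illustrative choices, not the settings of any particular model.

```python
from typing import List

def bin_expression(values: List[float], n_bins: int = 5) -> List[int]:
    """Discretize one cell's expression values into integer bin tokens.

    Zero counts map to token 0. Nonzero values map to tokens 1..n_bins using
    equal-frequency bin edges taken from this cell's own nonzero values.
    """
    nonzero = sorted(v for v in values if v > 0)
    if not nonzero:
        return [0] * len(values)
    # Edges at the 1/n_bins, 2/n_bins, ... quantiles of the nonzero values.
    edges = [nonzero[len(nonzero) * k // n_bins] for k in range(1, n_bins)]
    # Each nonzero value's token is 1 plus the number of edges it reaches.
    return [0 if v <= 0 else 1 + sum(v >= e for e in edges) for v in values]

print(bin_expression([0, 1.0, 2.0, 3.0, 4.0, 0, 5.0]))  # [0, 1, 2, 3, 4, 0, 5]
```

Because the bin edges come from the cell's own values, multiplying every count in a cell by the same factor (for example, after deeper sequencing) leaves its tokens unchanged.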

Gene names themselves can be embedded either with randomly initialized vectors or with vectors taken from pretrained language models that capture semantic relationships between genes. The embedded features then pass through stacked transformer blocks, the same architectural components that power today’s most advanced natural language processing systems. These blocks let the model learn complex relationships and patterns within the cellular data, through either generative pretraining or task-specific fine-tuning.
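The following sketch shows, under simplifying assumptions, how gene identity and expression level can be combined into a single token embedding and then mixed by attention. It uses randomly initialized tables (the first option above), adds a gene embedding to an expression-bin embedding, and applies one attention head with identity projections. The names `random_table`, `embed_cell`, and `self_attention`, the toy gene vocabulary, and the 8-dimensional embeddings are all hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]

def random_table(n_rows: int, dim: int, rng: random.Random) -> List[Vector]:
    """Randomly initialized embedding table, one row per token id
    (these rows would be learned during training in a real model)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_rows)]

def embed_cell(gene_ids: List[int], bin_tokens: List[int],
               gene_table: List[Vector], value_table: List[Vector]) -> List[Vector]:
    """Token embedding = gene-identity embedding + expression-bin embedding."""
    return [[g + v for g, v in zip(gene_table[gid], value_table[b])]
            for gid, b in zip(gene_ids, bin_tokens)]

def self_attention(x: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with identity Q/K/V projections.

    Every output row is a softmax-weighted average of all input rows, so each
    gene token's representation is updated using every other gene in the cell.
    A real transformer block adds learned projections, multiple heads,
    residual connections, layer normalization, and a feed-forward layer.
    """
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * row[j] for w, row in zip(weights, x)) / total
                    for j in range(d)])
    return out

# Toy example: three genes of one cell, with expression bins 0..5.
rng = random.Random(0)
vocab = {"CD3E": 0, "CD19": 1, "LYZ": 2}  # hypothetical gene vocabulary
gene_table = random_table(len(vocab), 8, rng)
value_table = random_table(6, 8, rng)
cell = embed_cell([vocab["CD3E"], vocab["CD19"], vocab["LYZ"]], [5, 0, 2],
                  gene_table, value_table)
hidden = self_attention(cell)
print(len(hidden), len(hidden[0]))  # 3 8
```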

Diverse Applications Across Biomedical Research

The versatility of scLLMs enables them to tackle numerous critical challenges in biomedical research. We can categorize their applications based on frequency and maturity:

High-Frequency Applications:

- Cell Type Annotation: Automatically identifying and classifying cell types from complex mixtures

- Cellular Clustering: Discovering novel cell states and subpopulations without prior biological knowledge

- Batch Effect Correction: Harmonizing data from different experimental batches and technologies

Moderate-Frequency Applications:

- Perturbation Prediction: Forecasting cellular responses to genetic, chemical, or environmental changes

- Spatial Omics Mapping: Integrating spatial context with molecular profiles to understand tissue organization

Emerging Applications:

- Gene Function Prediction: Inferring novel gene functions from expression patterns

- Gene Network Analysis: Reconstructing regulatory networks and signaling pathways

- Multi-Omics Integration: Combining transcriptomic data with proteomic, epigenetic, and other molecular measurements
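Cell type annotation, the first application listed, can be approached in several ways. One simple, model-agnostic technique, swapped in here for illustration and not attributed to any specific scLLM, is label transfer: average the embeddings of annotated reference cells for each type, then give each query cell the label of the most cosine-similar centroid. The sketch assumes the cell embeddings were already produced by a pretrained model; the 2-dimensional vectors and the function names are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]

def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def centroids(embeddings: List[Vector], labels: List[str]) -> Dict[str, Vector]:
    """Mean embedding of the reference cells carrying each cell type label."""
    sums: Dict[str, Vector] = {}
    counts: Dict[str, int] = {}
    for emb, lab in zip(embeddings, labels):
        acc = sums.setdefault(lab, [0.0] * len(emb))
        sums[lab] = [s + x for s, x in zip(acc, emb)]
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: [s / counts[lab] for s in vec] for lab, vec in sums.items()}

def annotate(query: List[Vector], refs: Dict[str, Vector]) -> List[str]:
    """Label each query cell with its most cosine-similar reference centroid."""
    return [max(refs, key=lambda lab: cosine(q, refs[lab])) for q in query]

# Toy 2-D vectors standing in for scLLM cell embeddings (hypothetical).
ref_emb = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.1, 0.8]]
ref_lab = ["T cell", "T cell", "B cell", "B cell"]
refs = centroids(ref_emb, ref_lab)
print(annotate([[0.8, 0.2], [0.2, 0.9]], refs))  # ['T cell', 'B cell']
```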

The Dual-Phase Training Approach

scLLM development follows a two-stage training paradigm borrowed from natural language processing. The first stage, pretraining, uses unsupervised or self-supervised learning to capture generalizable patterns of cellular gene expression. This stage requires massive datasets, often comprising millions of cells, to cover the broad spectrum of biological variation.

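Different models employ distinct pretraining strategies. scGPT and scFoundation, for instance, use input masking: portions of the gene or cell tokens are hidden, and the model must predict the missing information from the surrounding context. Geneformer instead represents each transcriptome with rank value encoding, ordering genes by their expression in the cell normalized by each gene's expression across the pretraining corpus. This places genes that distinguish a cell's state ahead of ubiquitously expressed housekeeping genes, and Geneformer then learns from these ranked sequences with a masked-gene objective.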
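The two input strategies described above can be sketched in a few lines of Python. `rank_value_encode` orders a cell's expressed genes by their count divided by a per-gene corpus median, and `mask_tokens` hides a random fraction of tokens and records what the model should predict. Both function names, the toy counts and medians, and the 15% masking rate are assumptions for illustration; the actual models differ in normalization details, vocabulary, and masking scheme.

```python
import random
from typing import Dict, List, Optional, Tuple

MASK = "<mask>"

def rank_value_encode(counts: Dict[str, float], corpus_median: Dict[str, float],
                      max_len: int = 2048) -> List[str]:
    """Rank a cell's expressed genes, highest normalized expression first.

    Each count is divided by that gene's median expression across a
    reference corpus (assumed precomputed and nonzero), so genes that are
    high everywhere, such as housekeeping genes, are pushed down the list.
    """
    scored = [(c / corpus_median[g], g) for g, c in counts.items() if c > 0]
    scored.sort(key=lambda t: (-t[0], t[1]))  # ties broken alphabetically
    return [g for _, g in scored[:max_len]]

def mask_tokens(tokens: List[str], rate: float, rng: random.Random
                ) -> Tuple[List[str], List[Optional[str]]]:
    """Hide a random fraction of tokens for masked-token pretraining.

    Returns the corrupted input and the targets; targets are None at
    positions that were not masked (no loss is computed there).
    """
    if not tokens:
        return [], []
    n_mask = max(1, round(rate * len(tokens)))
    picked = set(rng.sample(range(len(tokens)), n_mask))
    inputs = [MASK if i in picked else t for i, t in enumerate(tokens)]
    targets = [t if i in picked else None for i, t in enumerate(tokens)]
    return inputs, targets

# Toy example (hypothetical counts and corpus medians).
counts = {"ACTB": 50.0, "CD3E": 8.0, "LYZ": 0.0, "IL7R": 4.0}
corpus_median = {"ACTB": 100.0, "CD3E": 2.0, "LYZ": 5.0, "IL7R": 4.0}
ranked = rank_value_encode(counts, corpus_median)
print(ranked)  # ['CD3E', 'IL7R', 'ACTB']: ACTB has the top raw count but ranks last
inputs, targets = mask_tokens(ranked, rate=0.15, rng=random.Random(0))
print(inputs, targets)
```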

Fine-tuning follows pretraining: the model is adapted to a specific downstream task using a smaller, task-specific dataset. Methodologically, this typically involves attaching a specialized output layer, such as a multilayer perceptron for classification or regression, to the pretrained encoder. scBERT, scGPT, and CellLM illustrate this approach for cell type annotation: a classification head trained on the learned cell embeddings predicts cell type labels, typically by minimizing cross-entropy loss.
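A minimal sketch of this fine-tuning step, assuming frozen cell embeddings: a linear classification head (the simplest case of an MLP head) is trained by gradient descent on mean cross-entropy loss. In full fine-tuning the encoder weights would be updated as well. The toy embeddings, labels, learning rate, and function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]

def softmax(z: Vector) -> Vector:
    top = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return [v / total for v in e]

def logits(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Linear head: one weight row and one bias per cell type class."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def train_step(W: Matrix, b: Vector, xs: List[Vector], ys: List[int],
               lr: float = 0.5) -> float:
    """One full-batch gradient-descent step on mean cross-entropy loss.

    Updates W and b in place and returns the loss measured before the update.
    For softmax + cross-entropy, d(loss)/d(logit_k) = p_k - [k == y].
    """
    n_cls, dim = len(W), len(xs[0])
    gW = [[0.0] * dim for _ in range(n_cls)]
    gb = [0.0] * n_cls
    loss = 0.0
    for x, y in zip(xs, ys):
        p = softmax(logits(W, b, x))
        loss -= math.log(p[y])
        for k in range(n_cls):
            g = p[k] - (1.0 if k == y else 0.0)
            gb[k] += g
            for j in range(dim):
                gW[k][j] += g * x[j]
    n = len(xs)
    for k in range(n_cls):
        b[k] -= lr * gb[k] / n
        for j in range(dim):
            W[k][j] -= lr * gW[k][j] / n
    return loss / n

# Toy frozen cell embeddings with labels 0 = "T cell", 1 = "B cell" (hypothetical).
xs = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.1, 0.8]]
ys = [0, 0, 1, 1]
W = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]
losses = [train_step(W, b, xs, ys) for _ in range(50)]
print(round(losses[0], 4))  # 0.6931, i.e. ln 2 for two equally likely classes
print(losses[-1] < losses[0])  # True
```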

Overcoming Implementation Challenges

Despite their promise, widespread adoption of scLLMs faces several significant barriers. Computational requirements remain substantial, with training demanding extensive GPU resources and specialized expertise. Data quality and standardization present additional hurdles, as inconsistent annotation practices and variable data quality can compromise model performance.

Interpretability represents another critical challenge. While scLLMs can identify complex patterns, understanding the biological reasoning behind their predictions requires additional analytical layers. Researchers are developing explainable AI techniques to bridge this gap, ensuring that model outputs translate into biologically meaningful insights.

Integration with existing research workflows also poses implementation challenges. Successful deployment requires not only technical expertise but also careful consideration of how these tools complement established experimental and analytical approaches.

The Future of Single-Cell Analysis

As scLLM technology matures, we anticipate several exciting developments. Improved multimodal integration will enable more comprehensive cellular profiling, while enhanced scalability will make these tools accessible to broader research communities. The emergence of standardized benchmarks and best practices will accelerate adoption, and growing collaborations between computational biologists and experimental researchers will drive innovative applications.

These advances promise to democratize sophisticated single-cell analysis, potentially transforming how we understand cellular biology, disease mechanisms, and therapeutic interventions. As the field progresses, scLLMs may become indispensable tools in the biomedical researcher’s toolkit, enabling discoveries that were previously beyond our analytical capabilities.

The journey toward widespread scLLM adoption continues, but the potential rewards—from personalized medicine to fundamental biological insights—make overcoming the current barriers well worth the effort. With continued development and collaboration, these powerful models are poised to unlock new dimensions of cellular understanding and accelerate biomedical discovery.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.